Towards AI-Complete Question Answering: A Set of Prerequisite Toy Tasks

Visualizing and Interpreting Convolutional Neural Network

Papers

Deconvolutional Networks

- paper: http://www.matthewzeiler.com/pubs/cvpr2010/cvpr2010.pdf

- video: https://ipam.wistia.com/medias/zd0qnekkwc

- presentation: https://mathinstitutes.org/videos/videos/3295

Visualizing and Understanding Convolutional Networks

- intro: ECCV 2014

- arxiv: http://arxiv.org/abs/1311.2901

- slides: https://courses.cs.washington.edu/courses/cse590v/14au/cse590v_dec5_DeepVis.pdf

- slides: http://videolectures.net/site/normal_dl/tag=921098/eccv2014_zeiler_convolutional_networks_01.pdf

- video: http://videolectures.net/eccv2014_zeiler_convolutional_networks/

- chs: http://blog.csdn.net/kklots/article/details/17136059

- github: https://github.com/piergiaj/caffe-deconvnet
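
Zeiler and Fergus also check which image regions a classifier relies on by sliding a gray square over the input and watching the class score. The code below is a minimal pure-Python version of that occlusion-sensitivity test. It assumes a hypothetical `score_fn` that maps a 2D grayscale image (a list of lists) to a scalar class score. `toy_score`, the patch size, the stride and the fill value are illustrative choices, not values from the paper.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_map(image: Image, score_fn: Callable[[Image], float],
                  patch: int = 2, stride: int = 1,
                  fill: float = 0.0) -> List[List[float]]:
    """Drop in class score when a square occluder covers each position.

    Entry [i][j] is for the occluder whose top-left corner sits at
    (i * stride, j * stride); larger values mean that region mattered more.
    """
    h, w = len(image), len(image[0])
    base = score_fn(image)
    heatmap = []
    for top in range(0, h - patch + 1, stride):
        row = []
        for left in range(0, w - patch + 1, stride):
            occluded = [list(r) for r in image]  # copy, never mutate the input
            for y in range(top, top + patch):
                for x in range(left, left + patch):
                    occluded[y][x] = fill
            row.append(base - score_fn(occluded))
        heatmap.append(row)
    return heatmap


# Hypothetical toy "classifier": the score is the brightness of the top-left 2x2 corner.
def toy_score(img: Image) -> float:
    return img[0][0] + img[0][1] + img[1][0] + img[1][1]


if __name__ == "__main__":
    for row in occlusion_map([[1.0] * 4 for _ in range(4)], toy_score):
        print(row)
```

Running it prints the rows `[4.0, 2.0, 0.0]`, `[2.0, 1.0, 0.0]` and `[0.0, 0.0, 0.0]`. Only occluder positions that overlap the top-left corner lower the toy score.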

Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps

- intro: ICLR 2014 workshop

- arxiv: http://arxiv.org/abs/1312.6034

- github: https://github.com/yasunorikudo/vis-cnn
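
The image-specific saliency map in this paper is the magnitude of the derivative of the class score with respect to the input pixels. For color images, the paper takes the maximum over channels. The code below is a minimal sketch for a single-channel image and assumes a hypothetical `score_fn`. It approximates the gradient with central finite differences instead of the single backward pass a real network would use. `linear_score` with weights `W` is an illustrative toy model whose exact gradient is `W`.

```python
from typing import Callable, List

Image = List[List[float]]


def saliency_map(image: Image, score_fn: Callable[[Image], float],
                 eps: float = 1e-3) -> List[List[float]]:
    """Absolute gradient of the class score w.r.t. each pixel.

    The gradient is approximated with central finite differences, so this
    only suits tiny inputs; a real network would use one backward pass.
    """
    saliency = []
    for y, row in enumerate(image):
        sal_row = []
        for x in range(len(row)):
            plus = [list(r) for r in image]
            minus = [list(r) for r in image]
            plus[y][x] += eps
            minus[y][x] -= eps
            grad = (score_fn(plus) - score_fn(minus)) / (2 * eps)
            sal_row.append(abs(grad))
        saliency.append(sal_row)
    return saliency


# Hypothetical linear class score S(I) = sum_ij W_ij * I_ij, whose gradient is W.
W = [[0.5, -2.0], [0.0, 1.0]]


def linear_score(img: Image) -> float:
    return sum(w * p for w_row, p_row in zip(W, img) for w, p in zip(w_row, p_row))


if __name__ == "__main__":
    print(saliency_map([[0.1, 0.2], [0.3, 0.4]], linear_score))  # approximately |W|
```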

Understanding Deep Image Representations by Inverting Them

deepViz: Visualizing Convolutional Neural Networks for Image Classification

- paper: http://vis.berkeley.edu/courses/cs294-10-fa13/wiki/images/f/fd/DeepVizPaper.pdf

- github: https://github.com/bruckner/deepViz

Inverting Convolutional Networks with Convolutional Networks

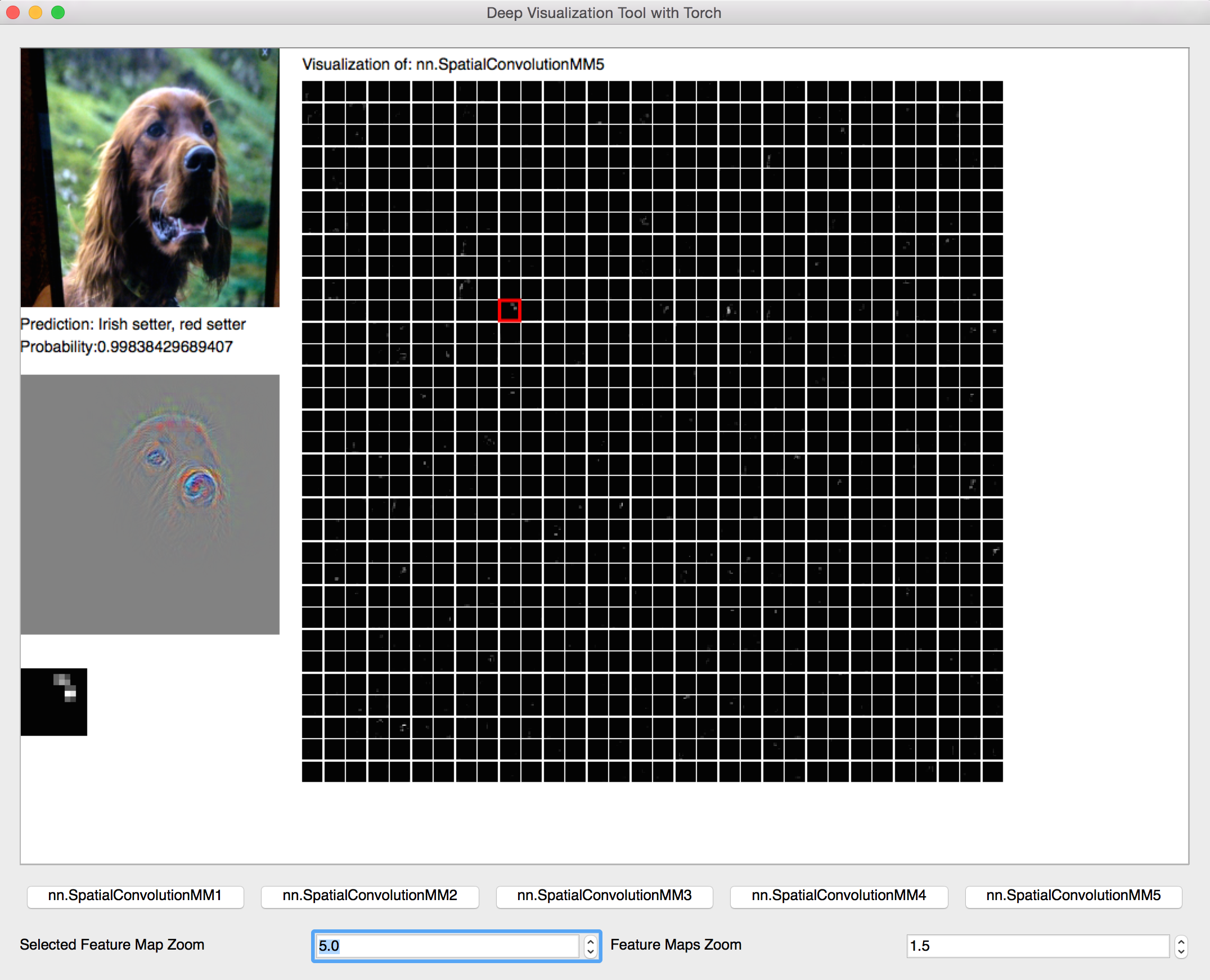

Understanding Neural Networks Through Deep Visualization

- project page: http://yosinski.com/deepvis

- arxiv: http://arxiv.org/abs/1506.06579

- github: https://github.com/yosinski/deep-visualization-toolbox

Visualizing Higher-Layer Features of a Deep Network

Generative Modeling of Convolutional Neural Networks

- project page: http://www.stat.ucla.edu/~yang.lu/Project/generativeCNN/main.html

- arxiv: http://arxiv.org/abs/1412.6296

- code: http://www.stat.ucla.edu/~yang.lu/Project/generativeCNN/doc/caffe-generative.zip

Understanding Intra-Class Knowledge Inside CNN

Learning FRAME Models Using CNN Filters for Knowledge Visualization

- project page: http://www.stat.ucla.edu/~yang.lu/project/deepFrame/main.html

- arxiv: http://arxiv.org/abs/1509.08379

- code: http://www.stat.ucla.edu/~yang.lu/project/deepFrame/doc/code.zip

Convergent Learning: Do different neural networks learn the same representations?

- intro: ICLR 2016

- arxiv: http://arxiv.org/abs/1511.07543

- github: https://github.com/yixuanli/convergent_learning

- video: http://videolectures.net/iclr2016_yosinski_convergent_learning/

Visualizing and Understanding Deep Texture Representations

- homepage: http://vis-www.cs.umass.edu/texture/

- arxiv: http://arxiv.org/abs/1511.05197

- paper: https://people.cs.umass.edu/~smaji/papers/texture-cvpr16.pdf

Visualizing Deep Convolutional Neural Networks Using Natural Pre-Images

An Interactive Node-Link Visualization of Convolutional Neural Networks

- homepage: http://scs.ryerson.ca/~aharley/vis/

- code: http://scs.ryerson.ca/~aharley/vis/source.zip

- demo: http://scs.ryerson.ca/~aharley/vis/conv/

- review: http://www.popsci.com/gaze-inside-mind-artificial-intelligence

Learning Deep Features for Discriminative Localization

- project page: http://cnnlocalization.csail.mit.edu/

- arxiv: http://arxiv.org/abs/1512.04150

- github: https://github.com/metalbubble/CAM

- blog: http://jacobcv.blogspot.com/2016/08/class-activation-maps-in-keras.html

- github: https://github.com/jacobgil/keras-cam
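
Consider a network that ends in global average pooling followed by a linear classifier. Its class activation map for class c is the class-weighted sum of the last convolutional feature maps: M_c(x, y) = Σ_k w_k^c f_k(x, y). The code below is a minimal sketch on nested lists. The feature maps and weights are hypothetical toy values, and real CAMs are upsampled to the input resolution before display. The sketch also checks the property that makes CAM work: averaging the map over space recovers the class score of the GAP + linear head, with the bias omitted.

```python
from typing import List

FeatureMap = List[List[float]]


def class_activation_map(features: List[FeatureMap],
                         class_weights: List[float]) -> FeatureMap:
    """M_c(x, y) = sum_k w_k^c * f_k(x, y).

    features[k] is channel k (H x W) of the last conv layer; class_weights[k]
    is the linear-layer weight from the pooled channel k to class c.
    """
    h, w = len(features[0]), len(features[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for fmap, wk in zip(features, class_weights):
        for y in range(h):
            for x in range(w):
                cam[y][x] += wk * fmap[y][x]
    return cam


def gap_class_score(features: List[FeatureMap], class_weights: List[float]) -> float:
    """Class score (bias omitted) of a GAP + linear head, for comparison."""
    pooled = [sum(map(sum, f)) / (len(f) * len(f[0])) for f in features]
    return sum(wk * p for wk, p in zip(class_weights, pooled))


if __name__ == "__main__":
    feats = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
    weights = [2.0, 0.5]
    cam = class_activation_map(feats, weights)
    print(cam)                                                      # [[2.0, 0.0], [0.0, 1.0]]
    print(sum(map(sum, cam)) / 4, gap_class_score(feats, weights))  # 0.75 0.75
```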

Multifaceted Feature Visualization: Uncovering the Different Types of Features Learned By Each Neuron in Deep Neural Networks

- intro: Visualization for Deep Learning workshop. ICML 2016

- arxiv: http://arxiv.org/abs/1602.03616

- homepage: http://www.evolvingai.org/nguyen-yosinski-clune-2016-multifaceted-feature

- github: https://github.com/Evolving-AI-Lab/mfv

A New Method to Visualize Deep Neural Networks

A Taxonomy and Library for Visualizing Learned Features in Convolutional Neural Networks

VisualBackProp: visualizing CNNs for autonomous driving

VisualBackProp: efficient visualization of CNNs

Grad-CAM: Why did you say that? Visual Explanations from Deep Networks via Gradient-based Localization

Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization

- arxiv: https://arxiv.org/abs/1610.02391

- github: https://github.com/ramprs/grad-cam/

- github(Keras): https://github.com/jacobgil/keras-grad-cam

- github(TensorFlow): https://github.com/Ankush96/grad-cam.tensorflow
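
Grad-CAM removes CAM's architectural restriction. It weights each feature map A^k of a chosen convolutional layer by the spatially averaged gradient of the class score y^c, α_k^c = (1/Z) Σ_i Σ_j ∂y^c/∂A^k_ij, and then applies a ReLU to Σ_k α_k^c A^k. The code below is a minimal sketch. It assumes the activations and gradients have already been obtained from a framework's backward pass, and the toy numbers are hypothetical.

```python
from typing import List

FeatureMap = List[List[float]]


def grad_cam(activations: List[FeatureMap],
             gradients: List[FeatureMap]) -> FeatureMap:
    """ReLU(sum_k alpha_k * A^k), alpha_k = spatial mean of dy_c/dA^k.

    activations[k] is channel A^k of the chosen conv layer and gradients[k]
    is dy_c/dA^k, both assumed to come from a framework's backward pass.
    """
    h, w = len(activations[0]), len(activations[0][0])
    # Neuron-importance weights: global-average-pool the gradients.
    alphas = [sum(map(sum, g)) / (h * w) for g in gradients]
    cam = [[0.0] * w for _ in range(h)]
    for a_k, alpha in zip(activations, alphas):
        for y in range(h):
            for x in range(w):
                cam[y][x] += alpha * a_k[y][x]
    # Keep only features with a positive influence on the class.
    return [[max(0.0, v) for v in row] for row in cam]


if __name__ == "__main__":
    acts = [[[1.0, 2.0], [3.0, 4.0]], [[1.0, 1.0], [1.0, 1.0]]]
    grads = [[[1.0, 1.0], [1.0, 1.0]], [[-2.0, -2.0], [-2.0, -2.0]]]
    print(grad_cam(acts, grads))  # [[0.0, 0.0], [1.0, 2.0]]
```

Averaging the gradients is what makes the weights play the role of CAM's classifier weights. For a GAP + linear head, the Grad-CAM weights are proportional to the CAM weights.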

Grad-CAM: Why did you say that?

- intro: NIPS 2016 Workshop on Interpretable Machine Learning in Complex Systems

- intro: extended abstract version of arXiv:1610.02391

- arxiv: https://arxiv.org/abs/1611.07450

Visualizing Residual Networks

- intro: UC Berkeley CS 280 final project report

- arxiv: https://arxiv.org/abs/1701.02362

Visualizing Deep Neural Network Decisions: Prediction Difference Analysis

- intro: University of Amsterdam & Canadian Institute of Advanced Research & Vrije Universiteit Brussel

- intro: ICLR 2017

- arxiv: https://arxiv.org/abs/1702.04595

- github: https://github.com/lmzintgraf/DeepVis-PredDiff

ActiVis: Visual Exploration of Industry-Scale Deep Neural Network Models

- intro: Georgia Tech & Facebook

- arxiv: https://arxiv.org/abs/1704.01942

Picasso: A Neural Network Visualizer

- arxiv: https://arxiv.org/abs/1705.05627

- github: https://github.com/merantix/picasso

- blog: https://medium.com/merantix/picasso-a-free-open-source-visualizer-for-cnns-d8ed3a35cfc5

CNN Fixations: An unraveling approach to visualize the discriminative image regions

A Forward-Backward Approach for Visualizing Information Flow in Deep Networks

- intro: NIPS 2017 Symposium on Interpretable Machine Learning. Iowa State University

- arxiv: https://arxiv.org/abs/1711.06221

Using KL-divergence to focus Deep Visual Explanation

https://arxiv.org/abs/1711.06431

An Introduction to Deep Visual Explanation

- intro: NIPS 2017 - Workshop Interpreting, Explaining and Visualizing Deep Learning

- arxiv: https://arxiv.org/abs/1711.09482

Visual Explanation by Interpretation: Improving Visual Feedback Capabilities of Deep Neural Networks

https://arxiv.org/abs/1712.06302

Visualizing the Loss Landscape of Neural Nets

- intro: University of Maryland & United States Naval Academy

- arxiv: https://arxiv.org/abs/1712.09913
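
The paper's key trick is filter normalization. A random Gaussian direction d is rescaled filter by filter so that each filter of d has the same norm as the corresponding filter of the parameters θ. The loss is then plotted along θ + αd. The code below is a minimal 1D sketch. It assumes the parameters are given as a list of flattened filters and uses a hypothetical `loss_fn`; the toy sum-of-squares loss is illustrative only.

```python
import math
import random
from typing import Callable, List, Sequence

Params = List[List[float]]  # each inner list is one flattened filter


def filter_normalized_direction(theta: Params, rng: random.Random) -> Params:
    """Gaussian direction with each filter rescaled to the norm of theta's filter."""
    direction = []
    for filt in theta:
        d = [rng.gauss(0.0, 1.0) for _ in filt]
        d_norm = math.sqrt(sum(v * v for v in d)) or 1.0  # guard against a zero draw
        t_norm = math.sqrt(sum(v * v for v in filt))
        direction.append([v / d_norm * t_norm for v in d])
    return direction


def loss_slice(theta: Params, direction: Params,
               loss_fn: Callable[[Params], float],
               alphas: Sequence[float]) -> List[float]:
    """Evaluate loss_fn(theta + alpha * direction) for each alpha (a 1D plot)."""
    values = []
    for a in alphas:
        moved = [[t + a * d for t, d in zip(tf, df)]
                 for tf, df in zip(theta, direction)]
        values.append(loss_fn(moved))
    return values


def toy_loss(params: Params) -> float:
    """Hypothetical stand-in for a training loss: sum of squared parameters."""
    return sum(v * v for f in params for v in f)


if __name__ == "__main__":
    theta = [[3.0, 4.0], [1.0, 0.0]]
    d = filter_normalized_direction(theta, random.Random(0))
    print(loss_slice(theta, d, toy_loss, [-1.0, -0.5, 0.0, 0.5, 1.0]))
```

Two such directions give the 2D contour plots used in the paper; this 1D slice uses one.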

Visualizing Deep Similarity Networks

https://arxiv.org/abs/1901.00536

Interpreting Convolutional Neural Networks

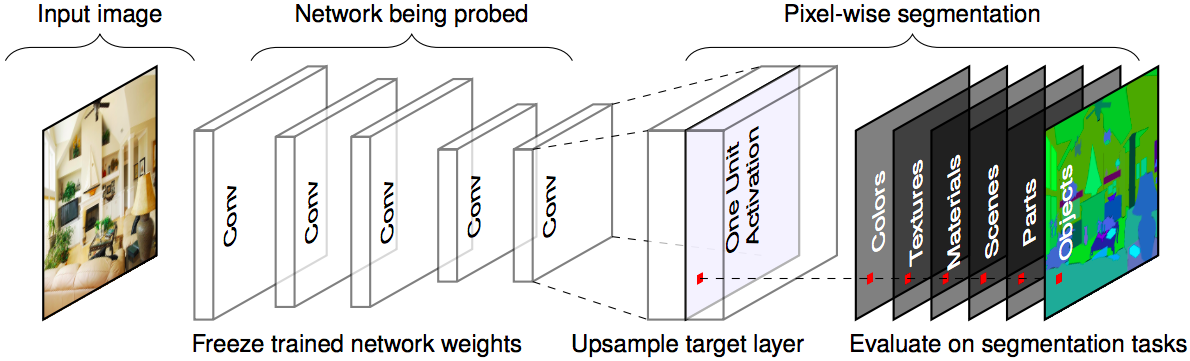

Network Dissection: Quantifying Interpretability of Deep Visual Representations

- intro: CVPR 2017 oral. MIT

- project page: http://netdissect.csail.mit.edu/

- arxiv: https://arxiv.org/abs/1704.05796

- github: https://github.com/CSAILVision/NetDissect
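
Network Dissection thresholds each unit's activation map at a top quantile computed over the whole dataset (the top 0.5%, hence the default `q=0.005`). It then scores how well the unit matches a visual concept by the dataset-wide IoU between the thresholded maps and the concept's segmentation masks. The code below is a minimal sketch. It assumes the activation maps are already upsampled to the mask resolution and flattened. The tiny example uses a larger, hypothetical `q` so that it selects more than one pixel.

```python
from typing import List, Sequence


def top_quantile_threshold(values: Sequence[float], q: float = 0.005) -> float:
    """Activation value that roughly a fraction q of all values reach or exceed."""
    ordered = sorted(values, reverse=True)
    k = max(1, round(q * len(ordered)))
    return ordered[k - 1]


def dissection_iou(activations: List[List[float]], masks: List[List[bool]],
                   threshold: float) -> float:
    """Dataset-wide IoU between thresholded unit activations and a concept mask.

    activations[n] and masks[n] are the flattened, equally sized maps for
    image n (the unit's map is assumed already upsampled to mask resolution).
    """
    inter = union = 0
    for act_img, mask_img in zip(activations, masks):
        for a, m in zip(act_img, mask_img):
            on = a >= threshold
            if on and m:
                inter += 1
            if on or m:
                union += 1
    return inter / union if union else 0.0


if __name__ == "__main__":
    acts = [[0.9, 0.1, 0.8, 0.2], [0.05, 0.7, 0.3, 0.0]]
    masks = [[True, False, True, False], [False, True, True, False]]
    t = top_quantile_threshold([v for img in acts for v in img], q=0.375)
    print(t, dissection_iou(acts, masks, t))  # 0.7 0.75
```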

Interpreting Deep Visual Representations via Network Dissection

https://arxiv.org/abs/1711.05611

Methods for Interpreting and Understanding Deep Neural Networks

- intro: Technische Universität Berlin & Fraunhofer Heinrich Hertz Institute

- arxiv: https://arxiv.org/abs/1706.07979

SVCCA: Singular Vector Canonical Correlation Analysis for Deep Learning Dynamics and Interpretability

- intro: NIPS 2017. Google Brain & Uber AI Labs

- arxiv: https://arxiv.org/abs/1706.05806

- github: https://github.com/google/svcca/

- blog: https://research.googleblog.com/2017/11/interpreting-deep-neural-networks-with.html

Towards Interpretable Deep Neural Networks by Leveraging Adversarial Examples

- intro: Tsinghua University

- arxiv: https://arxiv.org/abs/1708.05493

Interpretable Convolutional Neural Networks

https://arxiv.org/abs/1710.00935

Interpreting Convolutional Neural Networks Through Compression

- intro: NIPS 2017 Symposium on Interpretable Machine Learning

- arxiv: https://arxiv.org/abs/1711.02329

Interpreting Deep Neural Networks

Interpreting CNNs via Decision Trees

https://arxiv.org/abs/1802.00121

Visual Interpretability for Deep Learning: a Survey

https://arxiv.org/abs/1802.00614

Interpreting Deep Classifier by Visual Distillation of Dark Knowledge

- intro: University of Edinburgh & Huawei Research America

- arxiv: https://arxiv.org/abs/1803.04042

How convolutional neural network see the world - A survey of convolutional neural network visualization methods

- intro: Mathematical Foundations of Computing. George Mason University & Clarkson University

- arxiv: https://arxiv.org/abs/1804.11191

Understanding Regularization to Visualize Convolutional Neural Networks

- intro: Konica Minolta Laboratory Europe & Technical University of Munich

- arxiv: https://arxiv.org/abs/1805.00071

Deeper Interpretability of Deep Networks

- intro: University of Glasgow & University of Oxford & University of California

- arxiv: https://arxiv.org/abs/1811.07807

Interpretable CNNs

https://arxiv.org/abs/1901.02413

Explaining AlphaGo: Interpreting Contextual Effects in Neural Networks

https://arxiv.org/abs/1901.02184

Interpretable BoW Networks for Adversarial Example Detection

https://arxiv.org/abs/1901.02229

Deep Features Analysis with Attention Networks

- intro: In AAAI-19 Workshop on Network Interpretability for Deep Learning

- arxiv: https://arxiv.org/abs/1901.10042

Understanding Neural Networks via Feature Visualization: A survey

- intro: A book chapter in an Interpretable ML book (http://www.interpretable-ml.org/book/)

- arxiv: https://arxiv.org/abs/1904.08939

Explaining Neural Networks via Perturbing Important Learned Features

https://arxiv.org/abs/1911.11081

Interpreting Adversarially Trained Convolutional Neural Networks

- intro: ICML 2019

- arxiv: https://arxiv.org/abs/1905.09797

- github: https://github.com/PKUAI26/AT-CNN

Projects

Interactive Deep Neural Net Hallucinations

- project page: http://317070.github.io/Dream/

- github: https://github.com/317070/Twitch-plays-LSD-neural-net

torch-visbox

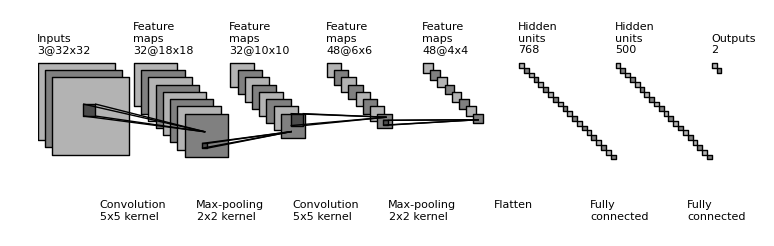

draw_convnet: Python script for illustrating Convolutional Neural Network (ConvNet)

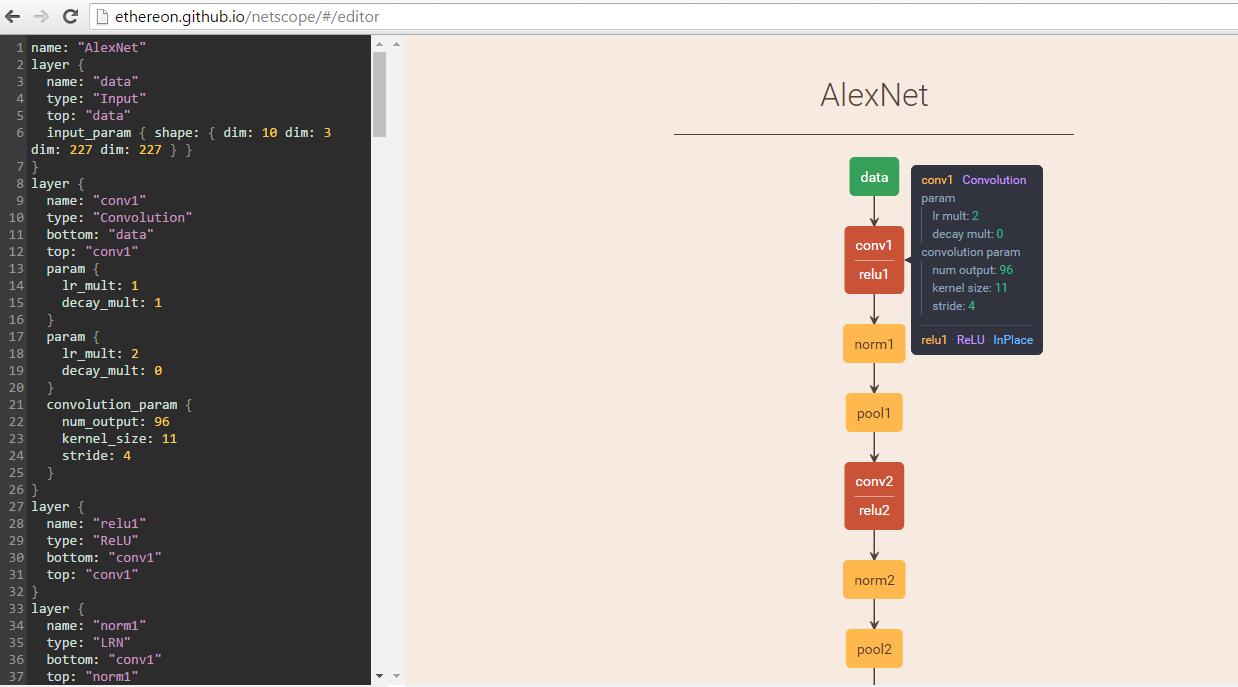

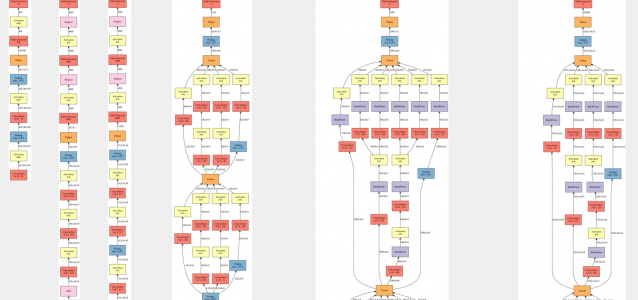

Caffe prototxt visualization

- intro: Recommended by Kaiming He

- github: https://github.com/ethereon/netscope

- quickstart: http://ethereon.github.io/netscope/quickstart.html

- demo: http://ethereon.github.io/netscope/#/editor

Keras Visualization Toolkit

mNeuron: A Matlab Plugin to Visualize Neurons from Deep Models

- project page: http://vision03.csail.mit.edu/cnn_art/

- github: https://github.com/donglaiw/mNeuron

cnnvis-pytorch

- intro: visualization of CNN in PyTorch

- github: https://github.com/leelabcnbc/cnnvis-pytorch

VisualDL

- intro: A platform to visualize the deep learning process

- homepage: http://visualdl.paddlepaddle.org/

- github: https://github.com/PaddlePaddle/VisualDL

Blogs

“Visualizing GoogLeNet Classes”

http://auduno.com/post/125362849838/visualizing-googlenet-classes

Visualizing CNN architectures side by side with mxnet

How convolutional neural networks see the world: An exploration of convnet filters with Keras

- blog: http://blog.keras.io/how-convolutional-neural-networks-see-the-world.html

- github: https://github.com/fchollet/keras/blob/master/examples/conv_filter_visualization.py

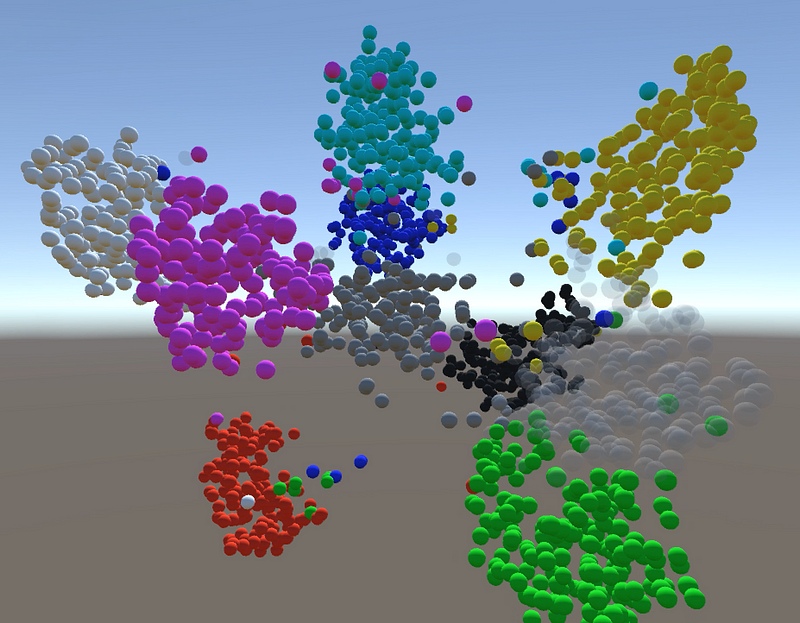

Visualizing Deep Learning with t-SNE (Tutorial and Video)

- blog: https://medium.com/@awjuliani/visualizing-deep-learning-with-t-sne-tutorial-and-video-e7c59ee4080c#.ubhijafw7

- github: https://github.com/awjuliani/3D-TSNE

Peeking inside Convnets

Visualizing Features from a Convolutional Neural Network

- blog: http://kvfrans.com/visualizing-features-from-a-convolutional-neural-network/

- github: https://github.com/kvfrans/feature-visualization

Visualizing Deep Neural Networks Classes and Features

http://ankivil.com/visualizing-deep-neural-networks-classes-and-features/

Visualizing parts of Convolutional Neural Networks using Keras and Cats

- blog: https://hackernoon.com/visualizing-parts-of-convolutional-neural-networks-using-keras-and-cats-5cc01b214e59#.bt6bb13dk

- github: https://github.com/erikreppel/visualizing_cnns

Visualizing convolutional neural networks

- intro: How to build convolutional neural networks from scratch with TensorFlow

- blog: https://www.oreilly.com/ideas/visualizing-convolutional-neural-networks

- github: https://github.com/wagonhelm/Visualizing-Convnets/

Tools

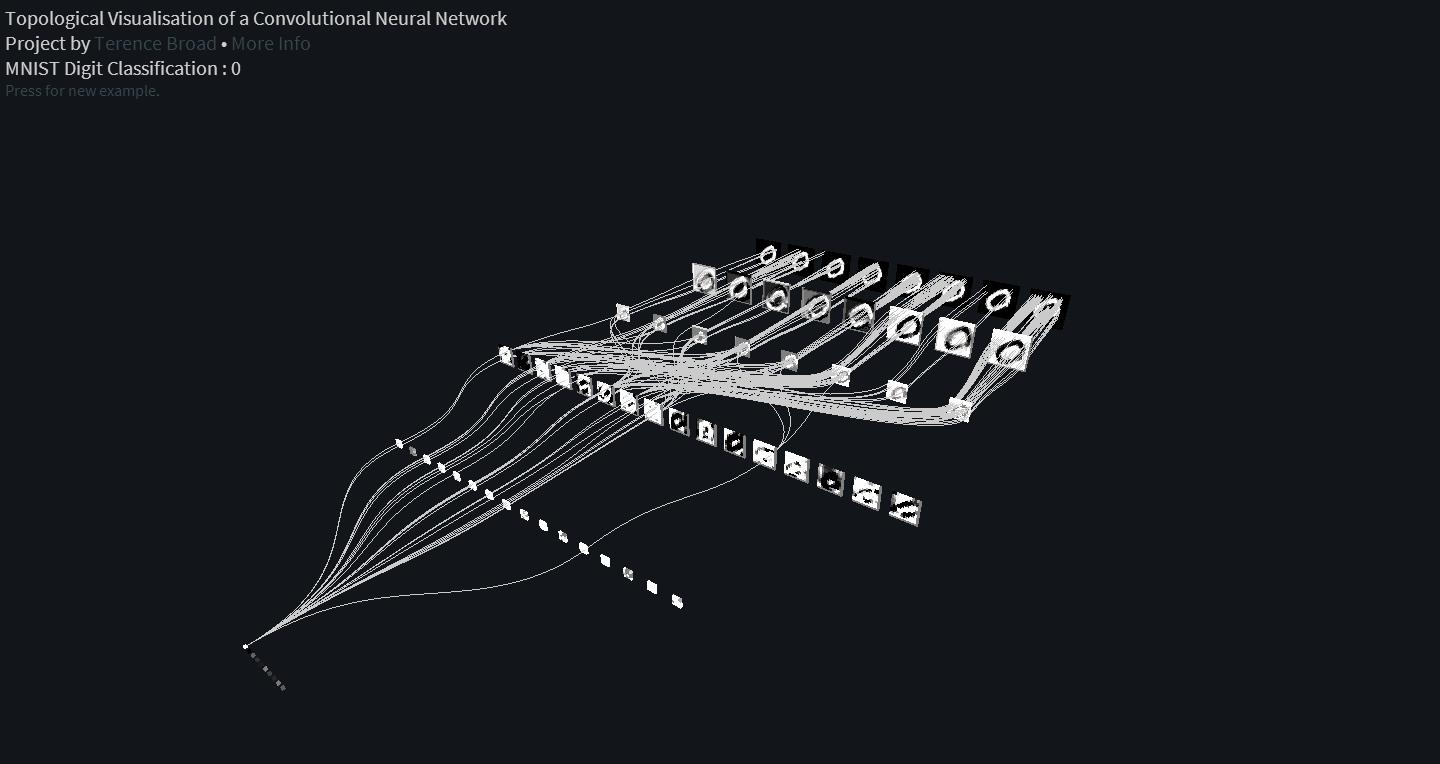

Topological Visualisation of a Convolutional Neural Network

http://terencebroad.com/convnetvis/vis.html

Visualization of Places-CNN and ImageNet CNN

- homepage: http://places.csail.mit.edu/visualizationCNN.html

- DrawCNN: http://people.csail.mit.edu/torralba/research/drawCNN/drawNet.html

Visualization of a feed forward Neural Network using MNIST dataset

- homepage: http://nn-mnist.sennabaum.com/

- github: https://github.com/csenn/nn-visualizer

CNNVis: Towards Better Analysis of Deep Convolutional Neural Networks

http://shixialiu.com/publications/cnnvis/demo/

Quiver: Interactive convnet features visualization for Keras

- homepage: https://jakebian.github.io/quiver/

- github: https://github.com/jakebian/quiver

Netron

- intro: Visualizer for deep learning and machine learning models

- github: https://github.com/lutzroeder/netron

Tracking

Learning A Deep Compact Image Representation for Visual Tracking

- intro: NIPS 2013

- intro: DLT

- project page: http://winsty.net/dlt.html

Hierarchical Convolutional Features for Visual Tracking

- intro: ICCV 2015

- project page: https://sites.google.com/site/jbhuang0604/publications/cf2

- github: https://github.com/jbhuang0604/CF2

Robust Visual Tracking via Convolutional Networks

- arxiv: http://arxiv.org/abs/1501.04505

- paper: http://kaihuazhang.net/CNT.pdf

- code: http://kaihuazhang.net/CNT_matlab.rar

Transferring Rich Feature Hierarchies for Robust Visual Tracking

- intro: SO-DLT

- arxiv: http://arxiv.org/abs/1501.04587

- slides: http://valse.mmcheng.net/ftp/20150325/RVT.pptx

Learning Multi-Domain Convolutional Neural Networks for Visual Tracking

- intro: Winner of the VOT2015 Challenge

- keywords: Multi-Domain Network (MDNet)

- homepage: http://cvlab.postech.ac.kr/research/mdnet/

- arxiv: http://arxiv.org/abs/1510.07945

- github: https://github.com/HyeonseobNam/MDNet

RATM: Recurrent Attentive Tracking Model

Understanding and Diagnosing Visual Tracking Systems

- intro: ICCV 2015

- project page: http://winsty.net/tracker_diagnose.html

- paper: http://winsty.net/papers/diagnose.pdf

- code(Matlab): http://120.52.72.43/winsty.net/c3pr90ntcsf0/diagnose/diagnose_code.zip

Recurrently Target-Attending Tracking

- paper: http://www.cv-foundation.org/openaccess/content_cvpr_2016/html/Cui_Recurrently_Target-Attending_Tracking_CVPR_2016_paper.html

- paper: http://www.cv-foundation.org/openaccess/content_cvpr_2016/papers/Cui_Recurrently_Target-Attending_Tracking_CVPR_2016_paper.pdf

Visual Tracking with Fully Convolutional Networks

- intro: ICCV 2015

- paper: http://202.118.75.4/lu/Paper/ICCV2015/iccv15_lijun.pdf

- github: https://github.com/scott89/FCNT

Deep Tracking: Seeing Beyond Seeing Using Recurrent Neural Networks

- intro: AAAI 2016

- arxiv: http://arxiv.org/abs/1602.00991

- github: https://github.com/pondruska/DeepTracking

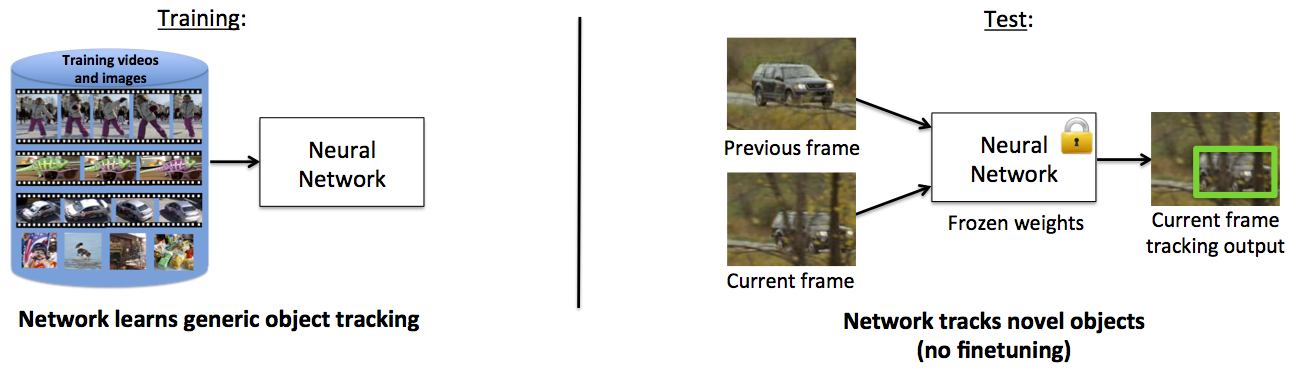

Learning to Track at 100 FPS with Deep Regression Networks

- intro: ECCV 2016

- intro: GOTURN: Generic Object Tracking Using Regression Networks

- project page: http://davheld.github.io/GOTURN/GOTURN.html

- arxiv: http://arxiv.org/abs/1604.01802

- github: https://github.com/davheld/GOTURN

Learning by tracking: Siamese CNN for robust target association

Fully-Convolutional Siamese Networks for Object Tracking

- intro: ECCV 2016

- intro: State-of-the-art performance in arbitrary object tracking at 50-100 FPS with Fully Convolutional Siamese networks

- project page: http://www.robots.ox.ac.uk/~luca/siamese-fc.html

- arxiv: http://arxiv.org/abs/1606.09549

- github(official): https://github.com/bertinetto/siamese-fc

- github(official): https://github.com/torrvision/siamfc-tf

- valse-video: http://www.iqiyi.com/w_19ruirwrel.html#vfrm=8-8-0-1
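
SiamFC scores every candidate position in a search region by cross-correlating the embedding of the exemplar (target) image with the embedding of the larger search image: f(z, x) = φ(z) ⋆ φ(x) + b. The peak of the resulting score map is taken as the new target location. The code below is a minimal sketch of that correlation step only. It assumes the embeddings φ(z) and φ(x) are already computed and given as lists of 2D channels, and the toy inputs are hypothetical.

```python
from typing import List, Tuple

Map = List[List[float]]


def cross_correlate(exemplar: List[Map], search: List[Map], bias: float = 0.0) -> Map:
    """Score map f(z, x) = phi(z) * phi(x) + b ('valid' mode, summed over channels).

    exemplar and search are lists of channels (H x W) holding the already
    computed embeddings phi(z) and phi(x); the search map must be larger.
    """
    zh, zw = len(exemplar[0]), len(exemplar[0][0])
    xh, xw = len(search[0]), len(search[0][0])
    scores = []
    for i in range(xh - zh + 1):
        row = []
        for j in range(xw - zw + 1):
            s = bias
            for zc, xc in zip(exemplar, search):
                for u in range(zh):
                    for v in range(zw):
                        s += zc[u][v] * xc[i + u][j + v]
            row.append(s)
        scores.append(row)
    return scores


def peak(scores: Map) -> Tuple[int, int]:
    """(row, col) of the highest response in the score map."""
    _, i, j = max((v, i, j) for i, r in enumerate(scores) for j, v in enumerate(r))
    return i, j


if __name__ == "__main__":
    z = [[[1.0, 0.0], [0.0, 1.0]]]  # one-channel toy exemplar embedding
    x = [[[0.0] * 4, [0.0] * 4, [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]]
    print(peak(cross_correlate(z, x)))  # (2, 1)
```

Because the same network embeds both inputs, the exemplar embedding can be computed once per video and reused as a fixed correlation kernel on every frame.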

Hedged Deep Tracking

- project page(paper+code): https://sites.google.com/site/yuankiqi/hdt

- paper: https://docs.google.com/viewer?a=v&pid=sites&srcid=ZGVmYXVsdGRvbWFpbnx5dWFua2lxaXxneDoxZjc2MmYwZGIzNjFhYTRl

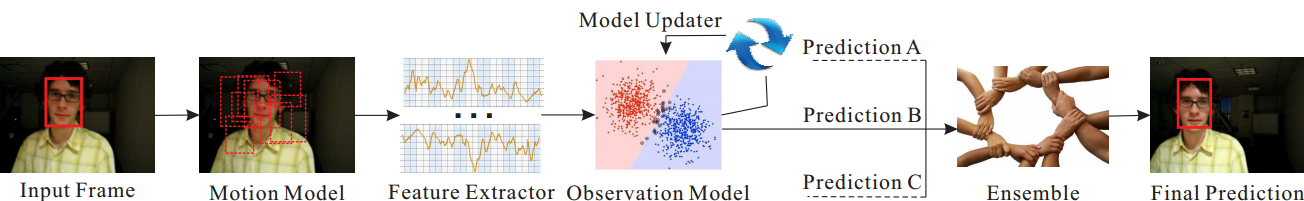

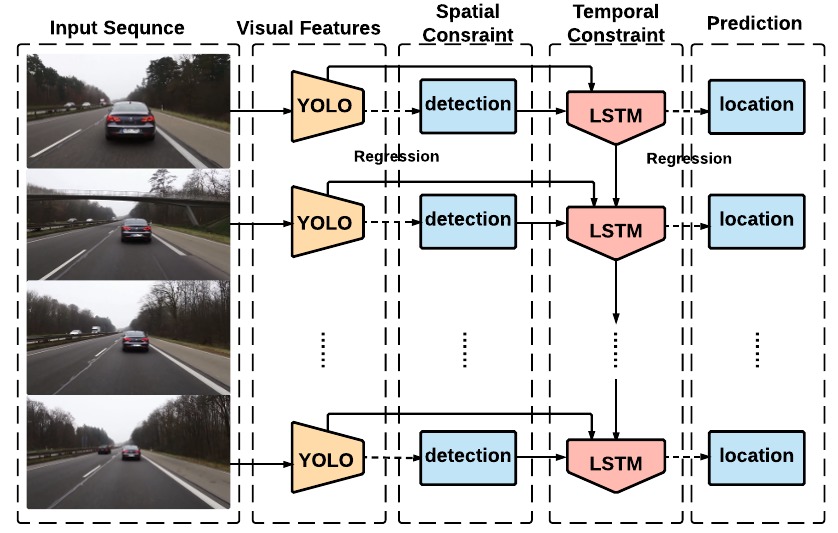

Spatially Supervised Recurrent Convolutional Neural Networks for Visual Object Tracking

- intro: ROLO is short for Recurrent YOLO, aimed at simultaneous object detection and tracking

- project page: http://guanghan.info/projects/ROLO/

- arxiv: http://arxiv.org/abs/1607.05781

- github: https://github.com/Guanghan/ROLO

Visual Tracking via Shallow and Deep Collaborative Model

Beyond Correlation Filters: Learning Continuous Convolution Operators for Visual Tracking


- intro: ECCV 2016

- intro: OTB-2015 (+5.1% in mean OP), Temple-Color (+4.6% in mean OP), and VOT2015 (20% relative reduction in failure rate)

- keywords: Continuous Convolution Operator Tracker (C-COT)

- project page: http://www.cvl.isy.liu.se/research/objrec/visualtracking/conttrack/index.html

- arxiv: http://arxiv.org/abs/1608.03773

- github(MATLAB): https://github.com/martin-danelljan/Continuous-ConvOp

Unsupervised Learning from Continuous Video in a Scalable Predictive Recurrent Network

- keywords: Predictive Vision Model (PVM)

- arxiv: http://arxiv.org/abs/1607.06854

- github: https://github.com/braincorp/PVM

Modeling and Propagating CNNs in a Tree Structure for Visual Tracking

Robust Scale Adaptive Kernel Correlation Filter Tracker With Hierarchical Convolutional Features

Deep Tracking on the Move: Learning to Track the World from a Moving Vehicle using Recurrent Neural Networks

OTB Results: visual tracker benchmark results

Convolutional Regression for Visual Tracking

Semantic tracking: Single-target tracking with inter-supervised convolutional networks

SANet: Structure-Aware Network for Visual Tracking

ECO: Efficient Convolution Operators for Tracking


- intro: CVPR 2017

- project page: http://www.cvl.isy.liu.se/research/objrec/visualtracking/ecotrack/index.html

- arxiv: https://arxiv.org/abs/1611.09224

- github: https://github.com/martin-danelljan/ECO

Dual Deep Network for Visual Tracking

Deep Motion Features for Visual Tracking

- intro: ICPR 2016. Best paper award in the “Computer Vision and Robot Vision” track

- arxiv: https://arxiv.org/abs/1612.06615

Globally Optimal Object Tracking with Fully Convolutional Networks

Robust and Real-time Deep Tracking Via Multi-Scale Domain Adaptation

- arxiv: https://arxiv.org/abs/1701.00561

- bitbucket: https://bitbucket.org/xinke_wang/msdat

Tracking The Untrackable: Learning To Track Multiple Cues with Long-Term Dependencies

Large Margin Object Tracking with Circulant Feature Maps

- intro: CVPR 2017

- intro: The experimental results demonstrate that the proposed tracker performs favorably against several state-of-the-art algorithms on challenging benchmark sequences while running at more than 80 frames per second

- arxiv: https://arxiv.org/abs/1703.05020

- notes: https://zhuanlan.zhihu.com/p/25761718

DCFNet: Discriminant Correlation Filters Network for Visual Tracking

End-to-end representation learning for Correlation Filter based tracking

- intro: CVPR 2017. University of Oxford

- intro: Training a Correlation Filter end-to-end allows lightweight networks of 2 layers (600 kB) to achieve state-of-the-art tracking performance at high speed.

- project page: http://www.robots.ox.ac.uk/~luca/cfnet.html

- arxiv: https://arxiv.org/abs/1704.06036

- github: https://github.com/bertinetto/cfnet

Context-Aware Correlation Filter Tracking

- intro: CVPR 2017 Oral

- project page: https://ivul.kaust.edu.sa/Pages/pub-ca-cf-tracking.aspx

- paper: https://ivul.kaust.edu.sa/Documents/Publications/2017/Context-Aware%20Correlation%20Filter%20Tracking.pdf

- github: https://github.com/thias15/Context-Aware-CF-Tracking

Robust Multi-view Pedestrian Tracking Using Neural Networks

https://arxiv.org/abs/1704.06370

Re3 : Real-Time Recurrent Regression Networks for Object Tracking

- intro: University of Washington

- arxiv: https://arxiv.org/abs/1705.06368

- demo: https://www.youtube.com/watch?v=PC0txGaYz2I

Robust Tracking Using Region Proposal Networks

https://arxiv.org/abs/1705.10447

Hierarchical Attentive Recurrent Tracking

- intro: NIPS 2017. University of Oxford

- arxiv: https://arxiv.org/abs/1706.09262

- github: https://github.com/akosiorek/hart

- results: https://youtu.be/Vvkjm0FRGSs

Siamese Learning Visual Tracking: A Survey

https://arxiv.org/abs/1707.00569

Robust Visual Tracking via Hierarchical Convolutional Features

- project page: https://sites.google.com/site/chaoma99/hcft-tracking

- arxiv: https://arxiv.org/abs/1707.03816

- github: https://github.com/chaoma99/HCFTstar

CREST: Convolutional Residual Learning for Visual Tracking

- intro: ICCV 2017

- project page: http://www.cs.cityu.edu.hk/~yibisong/iccv17/index.html

- arxiv: https://arxiv.org/abs/1708.00225

- github: https://github.com/ybsong00/CREST-Release

Learning Policies for Adaptive Tracking with Deep Feature Cascades

- intro: ICCV 2017 Spotlight

- arxiv: https://arxiv.org/abs/1708.02973

Recurrent Filter Learning for Visual Tracking

- intro: ICCV 2017 Workshop on VOT

- arxiv: https://arxiv.org/abs/1708.03874

Correlation Filters with Weighted Convolution Responses

- intro: ICCV 2017 workshop. 5th visual object tracking (VOT) tracker CFWCR

- paper: http://openaccess.thecvf.com/content_ICCV_2017_workshops/papers/w28/He_Correlation_Filters_With_ICCV_2017_paper.pdf

- github: https://github.com/he010103/CFWCR

Semantic Texture for Robust Dense Tracking

https://arxiv.org/abs/1708.08844

Learning Multi-frame Visual Representation for Joint Detection and Tracking of Small Objects

Differentiating Objects by Motion: Joint Detection and Tracking of Small Flying Objects

https://arxiv.org/abs/1709.04666

Tracking Persons-of-Interest via Unsupervised Representation Adaptation

- intro: Northwestern Polytechnical University & Virginia Tech & Hanyang University

- keywords: Multi-face tracking

- project page: http://vllab1.ucmerced.edu/~szhang/FaceTracking/

- arxiv: https://arxiv.org/abs/1710.02139

End-to-end Flow Correlation Tracking with Spatial-temporal Attention

https://arxiv.org/abs/1711.01124

UCT: Learning Unified Convolutional Networks for Real-time Visual Tracking

- intro: ICCV 2017 Workshops

- arxiv: https://arxiv.org/abs/1711.04661

Pixel-wise object tracking

https://arxiv.org/abs/1711.07377

MAVOT: Memory-Augmented Video Object Tracking

https://arxiv.org/abs/1711.09414

Learning Hierarchical Features for Visual Object Tracking with Recursive Neural Networks

https://arxiv.org/abs/1801.02021

Parallel Tracking and Verifying

https://arxiv.org/abs/1801.10496

Saliency-Enhanced Robust Visual Tracking

https://arxiv.org/abs/1802.02783

A Twofold Siamese Network for Real-Time Object Tracking

- intro: CVPR 2018

- arxiv: https://arxiv.org/abs/1802.08817

Learning Dynamic Memory Networks for Object Tracking

https://arxiv.org/abs/1803.07268

Context-aware Deep Feature Compression for High-speed Visual Tracking

- intro: CVPR 2018

- arxiv: https://arxiv.org/abs/1803.10537

VITAL: VIsual Tracking via Adversarial Learning

- intro: CVPR 2018 Spotlight

- arxiv: https://arxiv.org/abs/1804.04273

Unveiling the Power of Deep Tracking

https://arxiv.org/abs/1804.06833

A Novel Low-cost FPGA-based Real-time Object Tracking System

- intro: ASICON 2017

- arxiv: https://arxiv.org/abs/1804.05535

MV-YOLO: Motion Vector-aided Tracking by Semantic Object Detection

https://arxiv.org/abs/1805.00107

Information-Maximizing Sampling to Promote Tracking-by-Detection

https://arxiv.org/abs/1806.02523

Instance Segmentation and Tracking with Cosine Embeddings and Recurrent Hourglass Networks

- intro: MICCAI 2018

- arxiv: https://arxiv.org/abs/1806.02070

Stochastic Channel Decorrelation Network and Its Application to Visual Tracking

https://arxiv.org/abs/1807.01103

Fast Dynamic Convolutional Neural Networks for Visual Tracking

https://arxiv.org/abs/1807.03132

DeepTAM: Deep Tracking and Mapping

https://arxiv.org/abs/1808.01900

Distractor-aware Siamese Networks for Visual Object Tracking

- intro: ECCV 2018

- keywords: DaSiamRPN

- arxiv: https://arxiv.org/abs/1808.06048

- github: https://github.com/foolwood/DaSiamRPN

Multi-Branch Siamese Networks with Online Selection for Object Tracking

- intro: ISVC 2018 oral

- arxiv: https://arxiv.org/abs/1808.07349

Real-Time MDNet

- intro: ECCV 2018

- arxiv: https://arxiv.org/abs/1808.08834

Towards a Better Match in Siamese Network Based Visual Object Tracker

- intro: ECCV Visual Object Tracking Challenge Workshop VOT2018

- arxiv: https://arxiv.org/abs/1809.01368

DensSiam: End-to-End Densely-Siamese Network with Self-Attention Model for Object Tracking

- intro: ISVC 2018

- arxiv: https://arxiv.org/abs/1809.02714

Deformable Object Tracking with Gated Fusion

https://arxiv.org/abs/1809.10417

Deep Attentive Tracking via Reciprocative Learning

- intro: NIPS 2018

- project page: https://ybsong00.github.io/nips18_tracking/index

- arxiv: https://arxiv.org/abs/1810.03851

- github: https://github.com/shipubupt/NIPS2018

Online Visual Robot Tracking and Identification using Deep LSTM Networks

- intro: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, Canada, 2017. IROS RoboCup Best Paper Award

- arxiv: https://arxiv.org/abs/1810.04941

Detect or Track: Towards Cost-Effective Video Object Detection/Tracking

- intro: AAAI 2019

- arxiv: https://arxiv.org/abs/1811.05340

Deep Siamese Networks with Bayesian non-Parametrics for Video Object Tracking

https://arxiv.org/abs/1811.07386

Fast Online Object Tracking and Segmentation: A Unifying Approach

- intro: CVPR 2019

- project page: http://www.robots.ox.ac.uk/~qwang/SiamMask/

- arxiv: https://arxiv.org/abs/1812.05050

- github: https://github.com/foolwood/SiamMask

Siamese Cascaded Region Proposal Networks for Real-Time Visual Tracking

- intro: Temple University

- arxiv: https://arxiv.org/abs/1812.06148

Handcrafted and Deep Trackers: A Review of Recent Object Tracking Approaches

https://arxiv.org/abs/1812.07368

SiamRPN++: Evolution of Siamese Visual Tracking with Very Deep Networks

https://arxiv.org/abs/1812.11703

Deeper and Wider Siamese Networks for Real-Time Visual Tracking

https://arxiv.org/abs/1901.01660

SiamVGG: Visual Tracking using Deeper Siamese Networks

https://arxiv.org/abs/1902.02804

TrackNet: Simultaneous Object Detection and Tracking and Its Application in Traffic Video Analysis

https://arxiv.org/abs/1902.01466

Target-Aware Deep Tracking

- intro: CVPR 2019

- intro: Harbin Institute of Technology & Shanghai Jiao Tong University & Tencent AI Lab & University of California & Google Cloud AI

- arxiv: https://arxiv.org/abs/1904.01772

Unsupervised Deep Tracking

- intro: CVPR 2019

- intro: USTC & Tencent AI Lab & Shanghai Jiao Tong University

- arxiv: https://arxiv.org/abs/1904.01828

- github: https://github.com/594422814/UDT

- github: https://github.com/594422814/UDT_pytorch

Generic Multiview Visual Tracking

https://arxiv.org/abs/1904.02553

SPM-Tracker: Series-Parallel Matching for Real-Time Visual Object Tracking

- intro: CVPR 2019

- arxiv: https://arxiv.org/abs/1904.04452

A Strong Feature Representation for Siamese Network Tracker

https://arxiv.org/abs/1907.07880

Visual Tracking via Dynamic Memory Networks

- intro: TPAMI 2019

- arxiv: https://arxiv.org/abs/1907.07613

Multi-Adapter RGBT Tracking

Teacher-Students Knowledge Distillation for Siamese Trackers

https://arxiv.org/abs/1907.10586

Tell Me What to Track

- intro: Boston University & Horizon Robotics & University of Chinese Academy of Sciences

- arxiv: https://arxiv.org/abs/1907.11751

Learning to Track Any Object

- intro: ICCV 2019 Holistic Video Understanding workshop

- arxiv: https://arxiv.org/abs/1910.11844

ROI Pooled Correlation Filters for Visual Tracking

- intro: CVPR 2019

- arxiv: https://arxiv.org/abs/1911.01668

D3S – A Discriminative Single Shot Segmentation Tracker

- intro: CVPR 2020

- arxiv: https://arxiv.org/abs/1911.08862

- github(PyTorch): https://github.com/alanlukezic/d3s

Visual Tracking by TridentAlign and Context Embedding

Transformer Tracking

- intro: CVPR 2021

- intro: Dalian University of Technology & Peng Cheng Laboratory & Remark AI

- arxiv: https://arxiv.org/abs/2103.15436

- github: https://github.com/chenxin-dlut/TransT

Face Tracking

Mobile Face Tracking: A Survey and Benchmark

https://arxiv.org/abs/1805.09749

Multi-Object Tracking (MOT)

Simple Online and Realtime Tracking

- intro: ICIP 2016

- arxiv: https://arxiv.org/abs/1602.00763

- github: https://github.com/abewley/sort
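
In SORT, each track's box is first predicted forward with a Kalman filter. The predictions are then matched to the new detections by solving an assignment problem on IoU with the Hungarian algorithm, and matches below a minimum IoU are rejected. The code below is a minimal sketch of the association step only; it has no Kalman filter. It assumes boxes are `(x1, y1, x2, y2)` tuples. It also swaps the Hungarian algorithm for an exhaustive search over one-to-one assignments. That search reaches the same optimum but is only practical for a handful of boxes. The `iou_min=0.3` default is an illustrative assumption.

```python
from itertools import permutations
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks: Sequence[Box], dets: Sequence[Box], iou_min: float = 0.3
              ) -> Tuple[List[Tuple[int, int]], List[int], List[int]]:
    """Match predicted track boxes to detections by maximum total IoU.

    Exhaustive search over one-to-one assignments stands in for the
    Hungarian algorithm; it is exact but only practical for a few boxes.
    """
    n, m = len(tracks), len(dets)
    if n <= m:
        candidates = ([(i, j) for i, j in enumerate(p)] for p in permutations(range(m), n))
    else:
        candidates = ([(i, j) for j, i in enumerate(p)] for p in permutations(range(n), m))
    best, best_score = [], -1.0
    for pairs in candidates:
        score = sum(iou(tracks[i], dets[j]) for i, j in pairs)
        if score > best_score:
            best, best_score = pairs, score
    # Reject assignments whose overlap is too small to be the same object.
    matches = sorted((i, j) for i, j in best if iou(tracks[i], dets[j]) >= iou_min)
    matched_t = {i for i, _ in matches}
    matched_d = {j for _, j in matches}
    return (matches,
            [i for i in range(n) if i not in matched_t],
            [j for j in range(m) if j not in matched_d])


if __name__ == "__main__":
    tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
    dets = [(21, 21, 31, 31), (1, 1, 11, 11), (100, 100, 110, 110)]
    print(associate(tracks, dets))  # ([(0, 1), (1, 0)], [], [2])
```

Unmatched detections would start new tentative tracks, and unmatched tracks would eventually be deleted; this sketch leaves both policies out.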

Simple Online and Realtime Tracking with a Deep Association Metric

- intro: ICIP 2017

- arxiv: https://arxiv.org/abs/1703.07402

- mot challenge: https://motchallenge.net/tracker/DeepSORT_2

- github(official, Python): https://github.com/nwojke/deep_sort

- github(C++): https://github.com/oylz/ds
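
Deep SORT adds an appearance term to SORT's motion-based association. Each track keeps a gallery of recent unit-norm appearance descriptors. The appearance distance to a detection is the smallest cosine distance to that gallery, and it is combined with the motion (Mahalanobis) distance as λ·d1 + (1 − λ)·d2. The code below is a minimal sketch of the appearance side. It assumes descriptors arrive as plain lists of floats from some re-identification network, and the motion distance is simply passed in. The gallery `budget` of 100 and the class and function names are illustrative assumptions.

```python
import math
from collections import deque
from typing import Deque, List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a descriptor to unit length (zero vectors are returned unchanged)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > 0 else list(v)


class AppearanceGallery:
    """Per-track store of the most recent unit-norm appearance descriptors."""

    def __init__(self, budget: int = 100) -> None:
        # deque with maxlen silently drops the oldest descriptor when full.
        self.features: Deque[List[float]] = deque(maxlen=budget)

    def add(self, descriptor: Sequence[float]) -> None:
        self.features.append(l2_normalize(descriptor))

    def distance(self, descriptor: Sequence[float]) -> float:
        """Smallest cosine distance 1 - r_j . r_k over the stored descriptors."""
        if not self.features:
            return math.inf
        r = l2_normalize(descriptor)
        return min(1.0 - sum(a * b for a, b in zip(r, g)) for g in self.features)


def combined_cost(motion_dist: float, appearance_dist: float, lam: float) -> float:
    """Weighted sum lambda * d1 + (1 - lambda) * d2 of motion and appearance terms."""
    return lam * motion_dist + (1.0 - lam) * appearance_dist


if __name__ == "__main__":
    gallery = AppearanceGallery(budget=2)
    gallery.add([1.0, 0.0])
    gallery.add([0.0, 1.0])
    print(gallery.distance([2.0, 0.0]))  # 0.0, same direction as a stored descriptor
```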

StrongSORT: Make DeepSORT Great Again

- intro: Beijing University of Posts and Telecommunications & Xidian University

- arxiv: https://arxiv.org/abs/2202.13514

Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking

- intro: Carnegie Mellon University & The Chinese University of Hong Kong & Shanghai AI Laboratory

- arxiv: https://arxiv.org/abs/2203.14360

- github: https://github.com/noahcao/OC_SORT

BoT-SORT: Robust Associations Multi-Pedestrian Tracking

- intro: Tel-Aviv University

- arxiv: https://arxiv.org/abs/2206.14651

Virtual Worlds as Proxy for Multi-Object Tracking Analysis

- arxiv: http://arxiv.org/abs/1605.06457

- dataset(Virtual KITTI): http://www.xrce.xerox.com/Research-Development/Computer-Vision/Proxy-Virtual-Worlds

Multi-Class Multi-Object Tracking using Changing Point Detection

- intro: changing point detection, entity transition, object detection from video, convolutional neural network

- arxiv: http://arxiv.org/abs/1608.08434

POI: Multiple Object Tracking with High Performance Detection and Appearance Feature

- intro: ECCV workshop BMTT 2016. Sensetime

- keywords: KDNT

- arxiv: https://arxiv.org/abs/1610.06136

Multiple Object Tracking: A Literature Review

- intro: last revised 22 May 2017 (this version, v4)

- arxiv: https://arxiv.org/abs/1409.7618

Deep Network Flow for Multi-Object Tracking

- intro: CVPR 2017

- arxiv: https://arxiv.org/abs/1706.08482

Online Multi-Object Tracking Using CNN-based Single Object Tracker with Spatial-Temporal Attention Mechanism

https://arxiv.org/abs/1708.02843

Recurrent Autoregressive Networks for Online Multi-Object Tracking

https://arxiv.org/abs/1711.02741

SOT for MOT

- intro: Tsinghua University & Megvii Inc. (Face++)

- arxiv: https://arxiv.org/abs/1712.01059

Multi-Target, Multi-Camera Tracking by Hierarchical Clustering: Recent Progress on DukeMTMC Project

https://arxiv.org/abs/1712.09531

Multiple Target Tracking by Learning Feature Representation and Distance Metric Jointly

https://arxiv.org/abs/1802.03252

Tracking Noisy Targets: A Review of Recent Object Tracking Approaches

https://arxiv.org/abs/1802.03098

Machine Learning Methods for Solving Assignment Problems in Multi-Target Tracking

- intro: University of Florida

- arxiv: https://arxiv.org/abs/1802.06897

Learning to Detect and Track Visible and Occluded Body Joints in a Virtual World

- intro: University of Modena and Reggio Emilia

- arxiv: https://arxiv.org/abs/1803.08319

Features for Multi-Target Multi-Camera Tracking and Re-Identification

- intro: CVPR 2018 spotlight

- arxiv: https://arxiv.org/abs/1803.10859

High Performance Visual Tracking with Siamese Region Proposal Network

- intro: CVPR 2018 spotlight

- keywords: SiamRPN

- paper: http://openaccess.thecvf.com/content_cvpr_2018/papers/Li_High_Performance_Visual_CVPR_2018_paper.pdf

- slides: https://drive.google.com/file/d/1OGIOUqANvYfZjRoQfpiDqhPQtOvPCpdq/view

Trajectory Factory: Tracklet Cleaving and Re-connection by Deep Siamese Bi-GRU for Multiple Object Tracking

- intro: Peking University

- arxiv: https://arxiv.org/abs/1804.04555

Automatic Adaptation of Person Association for Multiview Tracking in Group Activities

- intro: Carnegie Mellon University & Argo AI & Adobe Research

- project page: http://www.cs.cmu.edu/~ILIM/projects/IM/Association4Tracking/

- arxiv: https://arxiv.org/abs/1805.08717

Improving Online Multiple Object tracking with Deep Metric Learning

https://arxiv.org/abs/1806.07592

Tracklet Association Tracker: An End-to-End Learning-based Association Approach for Multi-Object Tracking

- intro: Tsinghua University & Horizon Robotics

- arxiv: https://arxiv.org/abs/1808.01562

Multiple Object Tracking in Urban Traffic Scenes with a Multiclass Object Detector

- intro: 13th International Symposium on Visual Computing (ISVC)

- arxiv: https://arxiv.org/abs/1809.02073

Tracking by Animation: Unsupervised Learning of Multi-Object Attentive Trackers

https://arxiv.org/abs/1809.03137

Deep Affinity Network for Multiple Object Tracking

- intro: IEEE TPAMI 2018

- arxiv: https://arxiv.org/abs/1810.11780

- github: https://github.com/shijieS/SST

Exploit the Connectivity: Multi-Object Tracking with TrackletNet

https://arxiv.org/abs/1811.07258

Multi-Object Tracking with Multiple Cues and Switcher-Aware Classification

- intro: SenseTime Group Limited & Beihang University & The University of Sydney

- arxiv: https://arxiv.org/abs/1901.06129

Online Multi-Object Tracking with Dual Matching Attention Networks

- intro: ECCV 2018

- arxiv: https://arxiv.org/abs/1902.00749

Online Multi-Object Tracking with Instance-Aware Tracker and Dynamic Model Refreshment

https://arxiv.org/abs/1902.08231

Tracking without bells and whistles

- intro: Technical University of Munich

- keywords: Tracktor

- arxiv: https://arxiv.org/abs/1903.05625

- github: https://github.com/phil-bergmann/tracking_wo_bnw

Spatial-Temporal Relation Networks for Multi-Object Tracking

- intro: Hong Kong University of Science and Technology & Tsinghua University & MSRA

- arxiv: https://arxiv.org/abs/1904.11489

Fooling Detection Alone is Not Enough: First Adversarial Attack against Multiple Object Tracking

- intro: Baidu X-Lab & UC Irvine

- arxiv: https://arxiv.org/abs/1905.11026

State-aware Re-identification Feature for Multi-target Multi-camera Tracking

- intro: CVPR-2019 TRMTMCT Workshop

- intro: BUPT & Chinese Academy of Sciences & Horizon Robotics

- arxiv: https://arxiv.org/abs/1906.01357

DeepMOT: A Differentiable Framework for Training Multiple Object Trackers

- intro: Inria

- keywords: deep Hungarian network (DHN)

- arxiv: https://arxiv.org/abs/1906.06618

- gitlab: https://gitlab.inria.fr/yixu/deepmot

Graph Neural Based End-to-end Data Association Framework for Online Multiple-Object Tracking

- intro: Beihang University & Inception Institute of Artificial Intelligence

- arxiv: https://arxiv.org/abs/1907.05315

End-to-End Learning Deep CRF models for Multi-Object Tracking

https://arxiv.org/abs/1907.12176

End-to-end Recurrent Multi-Object Tracking and Trajectory Prediction with Relational Reasoning

- intro: University of Oxford

- arxiv: https://arxiv.org/abs/1907.12887

Robust Multi-Modality Multi-Object Tracking

- intro: ICCV 2019

- keywords: LiDAR

- arxiv: https://arxiv.org/abs/1909.03850

- github: https://github.com/ZwwWayne/mmMOT

Learning Multi-Object Tracking and Segmentation from Automatic Annotations

https://arxiv.org/abs/1912.02096

Learning a Neural Solver for Multiple Object Tracking

- intro: Technical University of Munich

- keywords: Message Passing Networks (MPNs)

- arxiv: https://arxiv.org/abs/1912.07515

- github: https://github.com/dvl-tum/mot_neural_solver

Multi-object Tracking via End-to-end Tracklet Searching and Ranking

- intro: Horizon Robotics Inc

- arxiv: https://arxiv.org/abs/2003.02795

Refinements in Motion and Appearance for Online Multi-Object Tracking

https://arxiv.org/abs/2003.07177

A Unified Object Motion and Affinity Model for Online Multi-Object Tracking

- intro: CVPR 2020

- arxiv: https://arxiv.org/abs/2003.11291

- github: https://github.com/yinjunbo/UMA-MOT

A Simple Baseline for Multi-Object Tracking

- intro: Microsoft Research Asia

- arxiv: https://arxiv.org/abs/2004.01888

- github: https://github.com/ifzhang/FairMOT

MOPT: Multi-Object Panoptic Tracking

- intro: University of Freiburg

- arxiv: https://arxiv.org/abs/2004.08189

SQE: a Self Quality Evaluation Metric for Parameters Optimization in Multi-Object Tracking

- intro: Tsinghua University & Megvii Inc

- arxiv: https://arxiv.org/abs/2004.07472

Multi-Object Tracking with Siamese Track-RCNN

- intro: Amazon Web Services (AWS) Rekognition

- arxiv: https://arxiv.org/abs/2004.07786

TubeTK: Adopting Tubes to Track Multi-Object in a One-Step Training Model

- intro: CVPR 2020 oral

- arxiv: https://arxiv.org/abs/2006.05683

- github: https://github.com/BoPang1996/TubeTK

Quasi-Dense Similarity Learning for Multiple Object Tracking

- intro: CVPR 2021 oral

- intro: Zhejiang University & Georgia Institute of Technology & ETH Zürich & Stanford University & UC Berkeley

- project page: https://www.vis.xyz/pub/qdtrack/

- arxiv: https://arxiv.org/abs/2006.06664

- github: https://github.com/SysCV/qdtrack

Simultaneous Detection and Tracking with Motion Modelling for Multiple Object Tracking

- intro: ECCV 2020

- arxiv: https://arxiv.org/abs/2008.08826

- github: https://github.com/shijieS/DMMN

MAT: Motion-Aware Multi-Object Tracking

https://arxiv.org/abs/2009.04794

SAMOT: Switcher-Aware Multi-Object Tracking and Still Another MOT Measure

https://arxiv.org/abs/2009.10338

GCNNMatch: Graph Convolutional Neural Networks for Multi-Object Tracking via Sinkhorn Normalization

- intro: Virginia Tech

- arxiv: https://arxiv.org/abs/2010.00067

Rethinking the competition between detection and ReID in Multi-Object Tracking

- intro: University of Electronic Science and Technology of China (UESTC) & Chinese Academy of Sciences

- arxiv: https://arxiv.org/abs/2010.12138

GMOT-40: A Benchmark for Generic Multiple Object Tracking

- intro: Temple University & Stony Brook University & Microsoft

- arxiv: https://arxiv.org/abs/2011.11858

Multi-object Tracking with a Hierarchical Single-branch Network

https://arxiv.org/abs/2101.01984

Discriminative Appearance Modeling with Multi-track Pooling for Real-time Multi-object Tracking

- intro: Georgia Institute of Technology & Oregon State University

- arxiv: https://arxiv.org/abs/2101.12159

Learning a Proposal Classifier for Multiple Object Tracking

- intro: CVPR 2021 poster

- arxiv: https://arxiv.org/abs/2103.07889

- github: https://github.com/daip13/LPC_MOT

Learnable Graph Matching: Incorporating Graph Partitioning with Deep Feature Learning for Multiple Object Tracking

- intro: CVPR 2021

- arxiv: https://arxiv.org/abs/2103.16178

- github: https://github.com/jiaweihe1996/GMTracker

Multiple Object Tracking with Correlation Learning

- intro: CVPR 2021

- intro: Machine Intelligence Technology Lab, Alibaba Group

- arxiv: https://arxiv.org/abs/2104.03541

ByteTrack: Multi-Object Tracking by Associating Every Detection Box

- intro: Huazhong University of Science and Technology & The University of Hong Kong & ByteDance

- arxiv: https://arxiv.org/abs/2110.06864

- github: https://github.com/ifzhang/ByteTrack

SiamMOT: Siamese Multi-Object Tracking

- intro: Amazon Web Services (AWS)

- arxiv: https://arxiv.org/abs/2105.11595

- github: https://github.com/amazon-research/siam-mot

Synthetic Data Are as Good as the Real for Association Knowledge Learning in Multi-object Tracking

- arxiv: https://arxiv.org/abs/2106.16100

- github: https://github.com/liuyvchi/MOTX

Track to Detect and Segment: An Online Multi-Object Tracker

- intro: CVPR 2021

- intro: SUNY Buffalo & TJU & Horizon Robotics

- project page: https://jialianwu.com/projects/TraDeS.html

- arxiv: https://arxiv.org/abs/2103.08808

- github: https://github.com/JialianW/TraDeS

Learning of Global Objective for Network Flow in Multi-Object Tracking

- intro: CVPR 2022

- intro: Rochester Institute of Technology & Monash University

- arxiv: https://arxiv.org/abs/2203.16210

MeMOT: Multi-Object Tracking with Memory

- intro: CVPR 2022 Oral

- arxiv: https://arxiv.org/abs/2203.16761

TR-MOT: Multi-Object Tracking by Reference

- intro: University of Washington & Beihang University & SenseTime Research

- arxiv: https://arxiv.org/abs/2203.16621

Towards Grand Unification of Object Tracking

- intro: ECCV 2022 Oral

- intro: Dalian University of Technology & ByteDance & The University of Hong Kong

- arxiv: https://arxiv.org/abs/2207.07078

- github: https://github.com/MasterBin-IIAU/Unicorn

Tracking Every Thing in the Wild

- intro: ECCV 2022

- intro: Computer Vision Lab, ETH Zürich

- project page: https://www.vis.xyz/pub/tet/

- arxiv: https://arxiv.org/abs/2207.12978

- github: https://github.com/SysCV/tet

Transformer

TransTrack: Multiple-Object Tracking with Transformer

- intro: The University of Hong Kong & ByteDance AI Lab & Tongji University & Carnegie Mellon University & Nanyang Technological University

- arxiv: https://arxiv.org/abs/2012.15460

- github: https://github.com/PeizeSun/TransTrack

TrackFormer: Multi-Object Tracking with Transformers

- intro: Technical University of Munich & Facebook AI Research (FAIR)

- arxiv: https://arxiv.org/abs/2101.02702

TransCenter: Transformers with Dense Queries for Multiple-Object Tracking

- intro: Inria & MIT & MIT-IBM Watson AI Lab

- arxiv: https://arxiv.org/abs/2103.15145

Looking Beyond Two Frames: End-to-End Multi-Object Tracking Using Spatial and Temporal Transformers

- intro: Monash University & The University of Adelaide & Australian Centre for Robotic Vision

- arxiv: https://arxiv.org/abs/2103.14829

TransMOT: Spatial-Temporal Graph Transformer for Multiple Object Tracking

- intro: Microsoft & Stony Brook University

- arxiv: https://arxiv.org/abs/2104.00194

MOTR: End-to-End Multiple-Object Tracking with TRansformer

- intro: MEGVII Technology

- arxiv: https://arxiv.org/abs/2105.03247

- github: https://github.com/megvii-model/MOTR

Global Tracking Transformers

- intro: CVPR 2022

- intro: The University of Texas at Austin & Apple

- arxiv: https://arxiv.org/abs/2203.13250

- github: https://github.com/xingyizhou/GTR

Multiple People Tracking

Multi-Person Tracking by Multicut and Deep Matching

- intro: Max Planck Institute for Informatics

- arxiv: http://arxiv.org/abs/1608.05404

Joint Flow: Temporal Flow Fields for Multi Person Tracking

- intro: University of Bonn

- arxiv: https://arxiv.org/abs/1805.04596

Multiple People Tracking by Lifted Multicut and Person Re-identification

- intro: CVPR 2017

- intro: Max Planck Institute for Informatics & Max Planck Institute for Intelligent Systems

- paper: http://openaccess.thecvf.com/content_cvpr_2017/papers/Tang_Multiple_People_Tracking_CVPR_2017_paper.pdf

- code: https://www.mpi-inf.mpg.de/departments/computer-vision-and-multimodal-computing/research/people-detection-pose-estimation-and-tracking/multiple-people-tracking-with-lifted-multicut-and-person-re-identification/

Tracking by Prediction: A Deep Generative Model for Multi-Person Localisation and Tracking

- intro: WACV 2018

- intro: Queensland University of Technology (QUT)

- arxiv: https://arxiv.org/abs/1803.03347

Real-time Multiple People Tracking with Deeply Learned Candidate Selection and Person Re-Identification

- intro: ICME 2018

- arxiv: https://arxiv.org/abs/1809.04427

- github: https://github.com/longcw/MOTDT

Deep Person Re-identification for Probabilistic Data Association in Multiple Pedestrian Tracking

https://arxiv.org/abs/1810.08565

Multiple People Tracking Using Hierarchical Deep Tracklet Re-identification

https://arxiv.org/abs/1811.04091

Multi-person Articulated Tracking with Spatial and Temporal Embeddings

- intro: CVPR 2019

- intro: SenseTime Research & The University of Sydney & SenseTime Computer Vision Research Group

- arxiv: https://arxiv.org/abs/1903.09214

Instance-Aware Representation Learning and Association for Online Multi-Person Tracking

- intro: Pattern Recognition

- intro: Sun Yat-sen University & Guangdong University of Foreign Studies & Carnegie Mellon University & University of California & Guilin University of Electronic Technology & WINNER Technology

- arxiv: https://arxiv.org/abs/1905.12409

Online Multiple Pedestrian Tracking using Deep Temporal Appearance Matching Association

- intro: ranked 2nd in the MOTChallenge at the CVPR 2019 workshop

- arxiv: https://arxiv.org/abs/1907.00831

Detecting Invisible People

- intro: Carnegie Mellon University & Argo AI

- project page: http://www.cs.cmu.edu/~tkhurana/invisible.htm

- arxiv: https://arxiv.org/abs/2012.08419

MOTS

MOTS: Multi-Object Tracking and Segmentation

- intro: CVPR 2019

- intro: RWTH Aachen University

- keywords: TrackR-CNN

- project page: https://www.vision.rwth-aachen.de/page/mots

- arxiv: https://arxiv.org/abs/1902.03604

- github(official): https://github.com/VisualComputingInstitute/TrackR-CNN

Segment as Points for Efficient Online Multi-Object Tracking and Segmentation

- intro: ECCV 2020 oral

- keywords: PointTrack

- arxiv: https://arxiv.org/abs/2007.01550

- github: https://github.com/detectRecog/PointTrack

PointTrack++ for Effective Online Multi-Object Tracking and Segmentation

- intro: CVPR 2020 MOTS Challenge winner. PointTrack++ ranks first on KITTI MOTS

- arxiv: https://arxiv.org/abs/2007.01549

Prototypical Cross-Attention Networks for Multiple Object Tracking and Segmentation

- intro: NeurIPS 2021 Spotlight

- intro: ETH Zürich & HKUST & Kuaishou Technology

- keywords: Prototypical Cross-Attention Networks (PCAN)

- project page: https://www.vis.xyz/pub/pcan/

- arxiv: https://arxiv.org/abs/2106.11958

- github: https://github.com/SysCV/pcan

- youtube: https://www.youtube.com/watch?v=hhAC2H0fmP8

- bilibili: https://www.bilibili.com/video/av593811548

- zhihu: https://zhuanlan.zhihu.com/p/445457150

Multi-Object Tracking and Segmentation with a Space-Time Memory Network

- intro: Polytechnique Montreal

- project page: http://www.mehdimiah.com/mentos+

- arxiv: https://arxiv.org/abs/2110.11284

Multi-target multi-camera tracking (MTMCT)

Traffic-Aware Multi-Camera Tracking of Vehicles Based on ReID and Camera Link Model

- intro: ACMMM 2020

- arxiv: https://arxiv.org/abs/2008.09785

3D MOT

A Baseline for 3D Multi-Object Tracking

Probabilistic 3D Multi-Object Tracking for Autonomous Driving

- intro: NeurIPS 2019

- intro: 1st Place Award, NuScenes Tracking Challenge

- intro: Stanford University & Toyota Research Institute

- arxiv: https://arxiv.org/abs/2001.05673

- github: https://github.com/eddyhkchiu/mahalanobis_3d_multi_object_tracking

JRMOT: A Real-Time 3D Multi-Object Tracker and a New Large-Scale Dataset

- intro: Stanford University

- arxiv: https://arxiv.org/abs/2002.08397

- github: https://github.com/StanfordVL/JRMOT_ROS

Real-time 3D Deep Multi-Camera Tracking

- intro: Microsoft Cloud & AI

- arxiv: https://arxiv.org/abs/2003.11753

P2B: Point-to-Box Network for 3D Object Tracking in Point Clouds

- intro: CVPR 2020 oral

- intro: Huazhong University of Science and Technology

- arxiv: https://arxiv.org/abs/2005.13888

- github: https://github.com/HaozheQi/P2B

PnPNet: End-to-End Perception and Prediction with Tracking in the Loop

- intro: CVPR 2020

- intro: Uber Advanced Technologies Group & University of Toronto

- arxiv: https://arxiv.org/abs/2005.14711

GNN3DMOT: Graph Neural Network for 3D Multi-Object Tracking with Multi-Feature Learning

- intro: CVPR 2020

- intro: Carnegie Mellon University

- arxiv: https://arxiv.org/abs/2006.07327

- github(official, PyTorch): https://github.com/xinshuoweng/GNN3DMOT

1st Place Solutions for Waymo Open Dataset Challenges – 2D and 3D Tracking

- intro: Horizon Robotics Inc.

- arxiv: https://arxiv.org/abs/2006.15506

Graph Neural Networks for 3D Multi-Object Tracking

- intro: ECCV 2020 workshop

- intro: Robotics Institute, Carnegie Mellon University

- project page: http://www.xinshuoweng.com/projects/GNN3DMOT/

- arxiv: https://arxiv.org/abs/2008.09506

- github: https://github.com/xinshuoweng/GNN3DMOT

Learnable Online Graph Representations for 3D Multi-Object Tracking

https://arxiv.org/abs/2104.11747

SimpleTrack: Understanding and Rethinking 3D Multi-object Tracking

- intro: UIUC & TuSimple

- arxiv: https://arxiv.org/abs/2111.09621

- github: https://github.com/TuSimple/SimpleTrack

Immortal Tracker: Tracklet Never Dies

- intro: University of Chinese Academy of Sciences & TuSimple & CASIA & UIUC

- arxiv: https://arxiv.org/abs/2111.13672

- github: https://github.com/ImmortalTracker/ImmortalTracker

Single Stage Joint Detection and Tracking

Bridging the Gap Between Detection and Tracking: A Unified Approach

- intro: ICCV 2019

- paper: https://openaccess.thecvf.com/content_ICCV_2019/papers/Huang_Bridging_the_Gap_Between_Detection_and_Tracking_A_Unified_Approach_ICCV_2019_paper.pdf

Towards Real-Time Multi-Object Tracking

- intro: Tsinghua University & Australian National University

- arxiv: https://arxiv.org/abs/1909.12605

- github: https://github.com/Zhongdao/Towards-Realtime-MOT

RetinaTrack: Online Single Stage Joint Detection and Tracking

- intro: CVPR 2020

- intro: Google

- arxiv: https://arxiv.org/abs/2003.13870

Tracking Objects as Points

- intro: UT Austin & Intel Labs

- intro: Simultaneous object detection and tracking using center points.

- keywords: CenterTrack

- arxiv: https://arxiv.org/abs/2004.01177

- github: https://github.com/xingyizhou/CenterTrack

Fully Convolutional Online Tracking

- intro: Nanjing University

- arxiv: https://arxiv.org/abs/2004.07109

- github(official, PyTorch): https://github.com/MCG-NJU/FCOT

Accurate Anchor Free Tracking

- intro: Tongji University & UCLA

- keywords: Anchor Free Siamese Network (AFSN)

- arxiv: https://arxiv.org/abs/2006.07560

Ocean: Object-aware Anchor-free Tracking

- intro: ECCV 2020

- intro: NLPR, CASIA & UCAS & Microsoft Research

- arxiv: https://arxiv.org/abs/2006.10721

- github: https://github.com/researchmm/TracKit

Joint Detection and Multi-Object Tracking with Graph Neural Networks

- intro: Carnegie Mellon University

- arxiv: https://arxiv.org/abs/2006.13164

Joint Multiple-Object Detection and Tracking

Chained-Tracker: Chaining Paired Attentive Regression Results for End-to-End Joint Multiple-Object Detection and Tracking

- intro: ECCV 2020 spotlight

- intro: Tencent Youtu Lab & Fudan University & Nara Institute of Science and Technology

- keywords: Chained-Tracker (CTracker)

- arxiv: https://arxiv.org/abs/2007.14557

- github: https://github.com/pjl1995/CTracker

SMOT: Single-Shot Multi Object Tracking

https://arxiv.org/abs/2010.16031

DEFT: Detection Embeddings for Tracking

Global Correlation Network: End-to-End Joint Multi-Object Detection and Tracking

- intro: ICCV 2021

- intro: Intell Tsinghua University & Beihang University

- arxiv: https://arxiv.org/abs/2103.12511

Tracking with Reinforcement Learning

Deep Reinforcement Learning for Visual Object Tracking in Videos

- intro: University of California at Santa Barbara & Samsung Research America

- arxiv: https://arxiv.org/abs/1701.08936

Visual Tracking by Reinforced Decision Making

End-to-end Active Object Tracking via Reinforcement Learning

https://arxiv.org/abs/1705.10561

Action-Decision Networks for Visual Tracking with Deep Reinforcement Learning

- project page: https://sites.google.com/view/cvpr2017-adnet

- paper: https://drive.google.com/file/d/0B34VXh5mZ22cZUs2Umc1cjlBMFU/view?usp=drive_web

Tracking as Online Decision-Making: Learning a Policy from Streaming Videos with Reinforcement Learning

https://arxiv.org/abs/1707.04991

Detect to Track and Track to Detect

- intro: ICCV 2017

- project page: https://www.robots.ox.ac.uk/~vgg/research/detect-track/

- arxiv: https://arxiv.org/abs/1710.03958

- github: https://github.com/feichtenhofer/Detect-Track

Projects

MMTracking

- intro: OpenMMLab Video Perception Toolbox. It supports Single Object Tracking (SOT), Multiple Object Tracking (MOT), and Video Object Detection (VID) in a unified framework.

- github: https://github.com/open-mmlab/mmtracking

Tensorflow_Object_Tracking_Video

- intro: Object tracking in TensorFlow (localization, detection, classification), developed to participate in the ImageNet VID competition

- github: https://github.com/DrewNF/Tensorflow_Object_Tracking_Video

Resources

Multi-Object-Tracking-Paper-List

- intro: Paper list and source code for multi-object-tracking

- github: https://github.com/SpyderXu/multi-object-tracking-paper-list