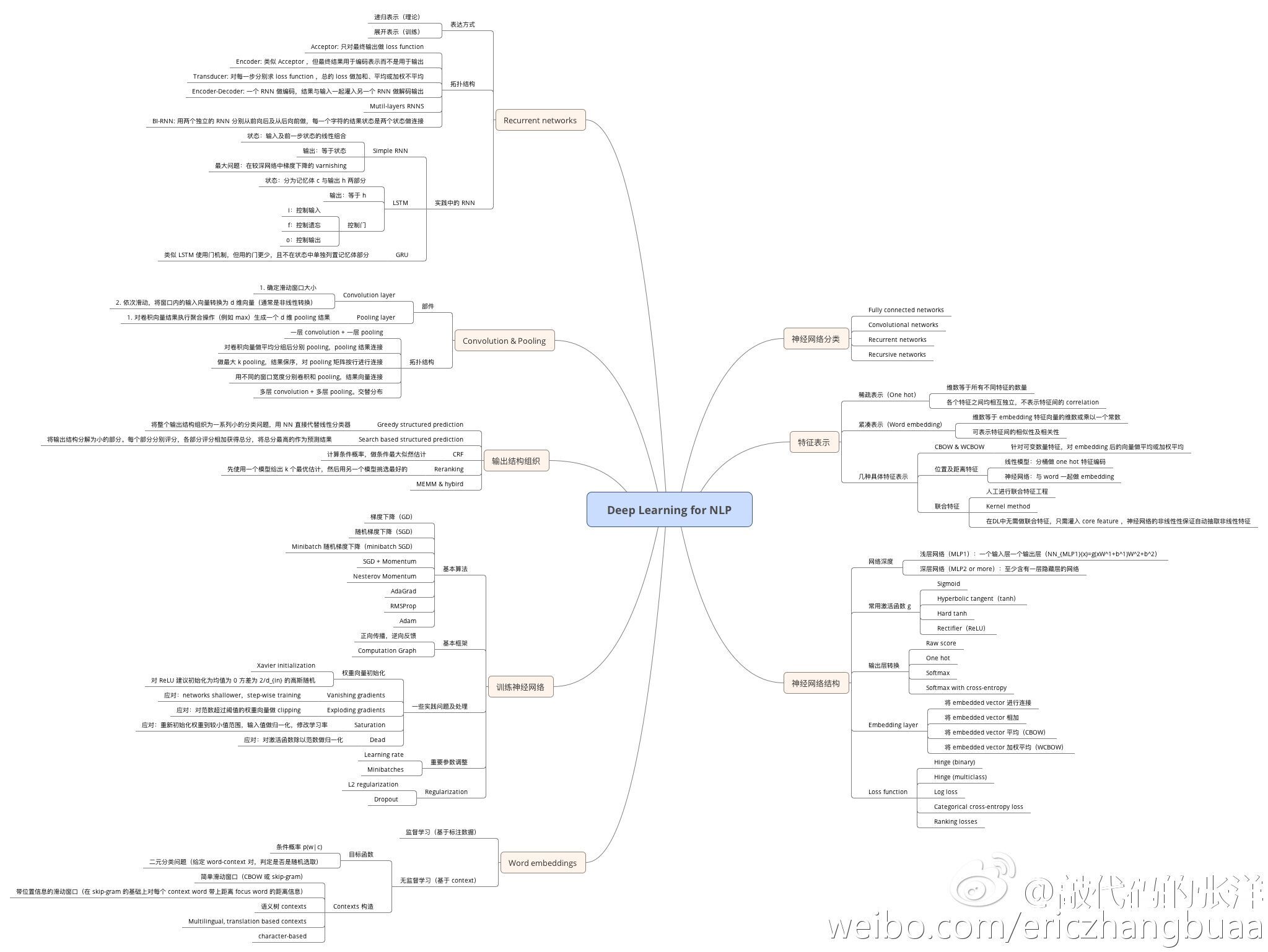

Natural Language Processing

Tutorials

Practical Neural Networks for NLP

- intro: EMNLP 2016

- github: https://github.com/clab/dynet_tutorial_examples

Structured Neural Networks for NLP: From Idea to Code

- slides: https://github.com/neubig/yrsnlp-2016/blob/master/neubig16yrsnlp.pdf

- github: https://github.com/neubig/yrsnlp-2016

Understanding Deep Learning Models in NLP

http://nlp.yvespeirsman.be/blog/understanding-deeplearning-models-nlp/

Deep learning for natural language processing, Part 1

https://softwaremill.com/deep-learning-for-nlp/

Neural Models

Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models

- intro: NIPS 2014 deep learning workshop

- arxiv: http://arxiv.org/abs/1411.2539

- github: https://github.com/ryankiros/visual-semantic-embedding

- results: http://www.cs.toronto.edu/~rkiros/lstm_scnlm.html

- demo: http://deeplearning.cs.toronto.edu/i2t

Improved Semantic Representations From Tree-Structured Long Short-Term Memory Networks

- arxiv: http://arxiv.org/abs/1503.00075

- github: https://github.com/stanfordnlp/treelstm

- github(Theano): https://github.com/ofirnachum/tree_rnn

Visualizing and Understanding Neural Models in NLP

- arxiv: http://arxiv.org/abs/1506.01066

- github: https://github.com/jiweil/Visualizing-and-Understanding-Neural-Models-in-NLP

Character-Aware Neural Language Models

Skip-Thought Vectors

A Primer on Neural Network Models for Natural Language Processing

Neural Variational Inference for Text Processing

- arxiv: http://arxiv.org/abs/1511.06038

- notes: http://dustintran.com/blog/neural-variational-inference-for-text-processing/

- github: https://github.com/carpedm20/variational-text-tensorflow

- github: https://github.com/cheng6076/NVDM

Sequence to Sequence Learning

Generating Text with Deep Reinforcement Learning

- intro: NIPS 2015

- arxiv: http://arxiv.org/abs/1510.09202

MUSIO: A Deep Learning based Chatbot Getting Smarter

- homepage: http://ec2-204-236-149-143.us-west-1.compute.amazonaws.com:9000/

- github(Torch7): https://github.com/deepcoord/seq2seq

Translation

Learning phrase representations using rnn encoder-decoder for statistical machine translation

- intro: GRU. EMNLP 2014

- arxiv: http://arxiv.org/abs/1406.1078

Neural Machine Translation by Jointly Learning to Align and Translate

- intro: ICLR 2015

- arxiv: http://arxiv.org/abs/1409.0473

- github: https://github.com/lisa-groundhog/GroundHog

Multi-Source Neural Translation

- intro: “report up to +4.8 Bleu increases on top of a very strong attention-based neural translation model.”

- arxiv: Multi-Source Neural Translation

- github(Zoph_RNN): https://github.com/isi-nlp/Zoph_RNN

- video: http://research.microsoft.com/apps/video/default.aspx?id=260336

Multi-Way, Multilingual Neural Machine Translation with a Shared Attention Mechanism

- arxiv: http://arxiv.org/abs/1601.01073

- github: https://github.com/nyu-dl/dl4mt-multi

- notes: https://github.com/dennybritz/deeplearning-papernotes/blob/master/notes/multi-way-nmt-shared-attention.md

Modeling Coverage for Neural Machine Translation

A Character-level Decoder without Explicit Segmentation for Neural Machine Translation

NEMATUS: Attention-based encoder-decoder model for neural machine translation

Variational Neural Machine Translation

- intro: EMNLP 2016

- arxiv: https://arxiv.org/abs/1605.07869

- github: https://github.com/DeepLearnXMU/VNMT

Neural Network Translation Models for Grammatical Error Correction

Linguistic Input Features Improve Neural Machine Translation

Sequence-Level Knowledge Distillation

- intro: EMNLP 2016

- arxiv: http://arxiv.org/abs/1606.07947

- github: https://github.com/harvardnlp/nmt-android

Neural Machine Translation: Breaking the Performance Plateau

Tips on Building Neural Machine Translation Systems

Semi-Supervised Learning for Neural Machine Translation

- intro: ACL 2016. Tsinghua University & Baidu Inc

- arxiv: http://arxiv.org/abs/1606.04596

EUREKA-MangoNMT: A C++ toolkit for neural machine translation for CPU

Deep Character-Level Neural Machine Translation

Neural Machine Translation Implementations

Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation

Learning to Translate in Real-time with Neural Machine Translation

Is Neural Machine Translation Ready for Deployment? A Case Study on 30 Translation Directions

Fully Character-Level Neural Machine Translation without Explicit Segmentation

Navigational Instruction Generation as Inverse Reinforcement Learning with Neural Machine Translation

Neural Machine Translation in Linear Time

- intro: ByteNet

- arxiv: https://arxiv.org/abs/1610.10099

- github: https://github.com/paarthneekhara/byteNet-tensorflow

- github(TensorFlow): https://github.com/buriburisuri/ByteNet

Neural Machine Translation with Reconstruction

A Convolutional Encoder Model for Neural Machine Translation

- intro: ACL 2017. Facebook AI Research

- arxiv: https://arxiv.org/abs/1611.02344

- github: https://github.com/pravarmahajan/cnn-encoder-nmt

Toward Multilingual Neural Machine Translation with Universal Encoder and Decoder

MXNMT: MXNet based Neural Machine Translation

Doubly-Attentive Decoder for Multi-modal Neural Machine Translation

- intro: Dublin City University & Trinity College Dublin

- arxiv: https://arxiv.org/abs/1702.01287

Massive Exploration of Neural Machine Translation Architectures

- intro: Google Brain

- arxiv: https://arxiv.org/abs/1703.03906

- github: https://github.com/google/seq2seq/

Depthwise Separable Convolutions for Neural Machine Translation

- intro: Google Brain & University of Toronto

- arxiv: https://arxiv.org/abs/1706.03059

Deep Architectures for Neural Machine Translation

- intro: WMT 2017 research track. University of Edinburgh & Charles University

- arxiv: https://arxiv.org/abs/1707.07631

- github: https://github.com/Avmb/deep-nmt-architectures

Marian: Fast Neural Machine Translation in C++

- intro: Microsoft & Adam Mickiewicz University in Poznan & University of Edinburgh

- homepage: https://marian-nmt.github.io/

- arxiv: https://arxiv.org/abs/1804.00344

- github: https://github.com/marian-nmt/marian

Sockeye

- intro: Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

- github: https://github.com/awslabs/sockeye/

Summarization

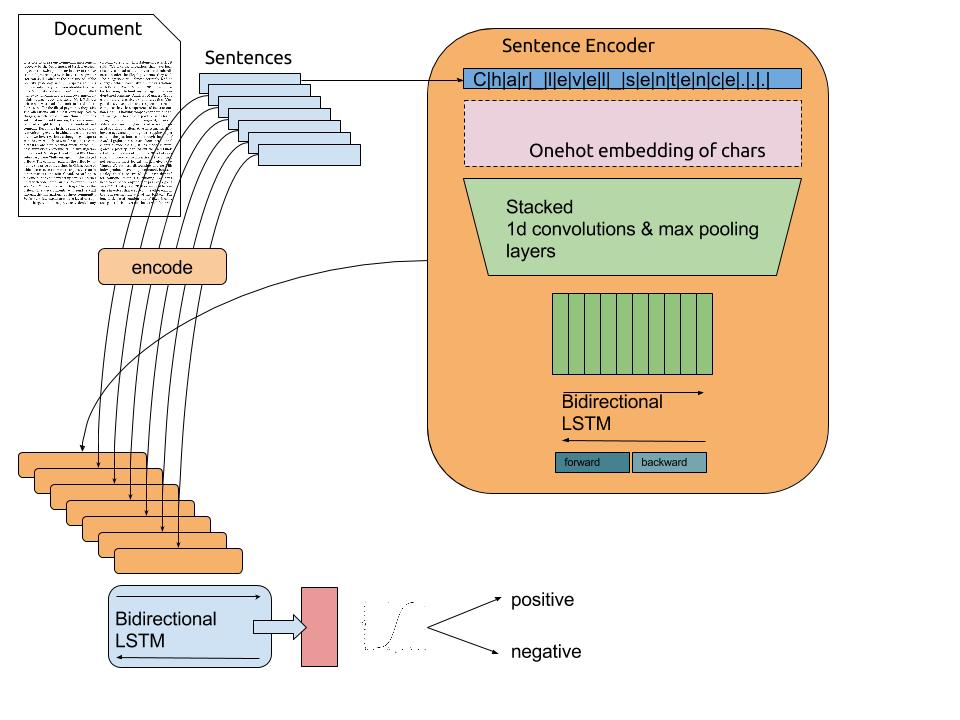

Extraction of Salient Sentences from Labelled Documents

- arxiv: http://arxiv.org/abs/1412.6815

- github: https://github.com/mdenil/txtnets

- notes: https://github.com/jxieeducation/DIY-Data-Science/blob/master/papernotes/2014/06/model-visualizing-summarising-conv-net.md

A Neural Attention Model for Abstractive Sentence Summarization

- intro: EMNLP 2015. Facebook AI Research

- arxiv: http://arxiv.org/abs/1509.00685

- github: https://github.com/facebook/NAMAS

- github(TensorFlow): https://github.com/carpedm20/neural-summary-tensorflow

A Convolutional Attention Network for Extreme Summarization of Source Code

- homepage: http://groups.inf.ed.ac.uk/cup/codeattention/

- arxiv: http://arxiv.org/abs/1602.03001

- notes: https://github.com/jxieeducation/DIY-Data-Science/blob/master/papernotes/2016/02/conv-attention-network-source-code-summarization.md

Abstractive Text Summarization Using Sequence-to-Sequence RNNs and Beyond

- intro: IBM Watson & Université de Montréal

- arxiv: http://arxiv.org/abs/1602.06023

textsum: Text summarization with TensorFlow

- blog: https://research.googleblog.com/2016/08/text-summarization-with-tensorflow.html

- github: https://github.com/tensorflow/models/tree/master/textsum

How to Run Text Summarization with TensorFlow

- blog: https://medium.com/@surmenok/how-to-run-text-summarization-with-tensorflow-d4472587602d#.mll1rqgjg

- github: https://github.com/surmenok/TextSum

Reading Comprehension

Text Comprehension with the Attention Sum Reader Network

Text Understanding with the Attention Sum Reader Network

- intro: ACL 2016

- arxiv: https://arxiv.org/abs/1603.01547

- github: https://github.com/rkadlec/asreader

A Thorough Examination of the CNN/Daily Mail Reading Comprehension Task

Consensus Attention-based Neural Networks for Chinese Reading Comprehension

- arxiv: http://arxiv.org/abs/1607.02250

- dataset(“HFL-RC”): http://hfl.iflytek.com/chinese-rc/

Separating Answers from Queries for Neural Reading Comprehension

Attention-over-Attention Neural Networks for Reading Comprehension

Teaching Machines to Read and Comprehend CNN News and Children Books using Torch

Reasoning with Memory Augmented Neural Networks for Language Comprehension

Bidirectional Attention Flow for Machine Comprehension

- project page: https://allenai.github.io/bi-att-flow/

- github: https://github.com/allenai/bi-att-flow

NewsQA: A Machine Comprehension Dataset

- arxiv: https://arxiv.org/abs/1611.09830

- dataset: http://datasets.maluuba.com/NewsQA

- github: https://github.com/Maluuba/newsqa

Gated-Attention Readers for Text Comprehension

- intro: CMU

- arxiv: https://arxiv.org/abs/1606.01549

- github: https://github.com/bdhingra/ga-reader

Get To The Point: Summarization with Pointer-Generator Networks

- intro: ACL 2017. Stanford University & Google Brain

- arxiv: https://arxiv.org/abs/1704.04368

- github: https://github.com/abisee/pointer-generator

Language Understanding

Recurrent Neural Networks with External Memory for Language Understanding

- arxiv: http://arxiv.org/abs/1506.00195

- github: https://github.com/npow/RNN-EM

Neural Semantic Encoders

- intro: EACL 2017

- arxiv: https://arxiv.org/abs/1607.04315

- github(Keras): https://github.com/pdasigi/neural-semantic-encoders

Neural Tree Indexers for Text Understanding

- arxiv: https://arxiv.org/abs/1607.04492

- bitbucket: https://bitbucket.org/tsendeemts/nti/src

Better Text Understanding Through Image-To-Text Transfer

- intro: Google Brain & Technische Universität München

- arxiv: https://arxiv.org/abs/1705.08386

Text Classification

Convolutional Neural Networks for Sentence Classification

- intro: EMNLP 2014

- arxiv: http://arxiv.org/abs/1408.5882

- github(Theano): https://github.com/yoonkim/CNN_sentence

- github(Torch): https://github.com/harvardnlp/sent-conv-torch

- github(Keras): https://github.com/alexander-rakhlin/CNN-for-Sentence-Classification-in-Keras

- github(TensorFlow): https://github.com/abhaikollara/CNN-Sentence-Classification

Recurrent Convolutional Neural Networks for Text Classification

- paper: http://www.aaai.org/ocs/index.php/AAAI/AAAI15/paper/view/9745/9552

- github: https://github.com/knok/rcnn-text-classification

Character-level Convolutional Networks for Text Classification

- intro: NIPS 2015. “Text Understanding from Scratch”

- arxiv: http://arxiv.org/abs/1509.01626

- github: https://github.com/zhangxiangxiao/Crepe

- datasets: http://goo.gl/JyCnZq

- github(TensorFlow): https://github.com/mhjabreel/CharCNN

A C-LSTM Neural Network for Text Classification

Rationale-Augmented Convolutional Neural Networks for Text Classification

Text classification using DIGITS and Torch7

Recurrent Neural Network for Text Classification with Multi-Task Learning

Deep Multi-Task Learning with Shared Memory

- intro: EMNLP 2016

- arxiv: https://arxiv.org/abs/1609.07222

Virtual Adversarial Training for Semi-Supervised Text Classification

Adversarial Training Methods for Semi-Supervised Text Classification

- arxiv: http://arxiv.org/abs/1605.07725

- notes: https://github.com/dennybritz/deeplearning-papernotes/blob/master/notes/adversarial-text-classification.md

Sentence Convolution Code in Torch: Text classification using a convolutional neural network

Bag of Tricks for Efficient Text Classification

- intro: Facebook AI Research

- arxiv: http://arxiv.org/abs/1607.01759

- github: https://github.com/kemaswill/fasttext_torch

- github: https://github.com/facebookresearch/fastText

Actionable and Political Text Classification using Word Embeddings and LSTM

Implementing a CNN for Text Classification in TensorFlow

fancy-cnn: Multiparadigm Sequential Convolutional Neural Networks for text classification

Convolutional Neural Networks for Text Categorization: Shallow Word-level vs. Deep Character-level

Tweet Classification using RNN and CNN

Hierarchical Attention Networks for Document Classification

- intro: CMU & MSR. NAACL 2016

- paper: https://www.cs.cmu.edu/~diyiy/docs/naacl16.pdf

- github(TensorFlow): https://github.com/raviqqe/tensorflow-font2char2word2sent2doc

- github(TensorFlow): https://github.com/ematvey/deep-text-classifier

AC-BLSTM: Asymmetric Convolutional Bidirectional LSTM Networks for Text Classification

- arxiv: https://arxiv.org/abs/1611.01884

- github(MXNet): https://github.com/Ldpe2G/AC-BLSTM

Generative and Discriminative Text Classification with Recurrent Neural Networks

- intro: DeepMind

- arxiv: https://arxiv.org/abs/1703.01898

Adversarial Multi-task Learning for Text Classification

- intro: ACL 2017

- arxiv: https://arxiv.org/abs/1704.05742

- data: http://nlp.fudan.edu.cn/data/

Deep Text Classification Can be Fooled

- intro: Renmin University of China

- arxiv: https://arxiv.org/abs/1704.08006

Deep neural network framework for multi-label text classification

Multi-Task Label Embedding for Text Classification

- intro: Shanghai Jiao Tong University

- arxiv: https://arxiv.org/abs/1710.07210

Text Clustering

Self-Taught Convolutional Neural Networks for Short Text Clustering

- intro: Chinese Academy of Sciences. Accepted for publication in Neural Networks

- arxiv: https://arxiv.org/abs/1701.00185

- github: https://github.com/jacoxu/STC2

Alignment

Aligning Books and Movies: Towards Story-like Visual Explanations by Watching Movies and Reading Books

Dialog

Visual Dialog

- website: http://visualdialog.org/

- arxiv: https://arxiv.org/abs/1611.08669

- github: https://github.com/batra-mlp-lab/visdial-amt-chat

- github(Torch): https://github.com/batra-mlp-lab/visdial

- github(PyTorch): https://github.com/Cloud-CV/visual-chatbot

- demo: http://visualchatbot.cloudcv.org/

Papers, code and data from FAIR for various memory-augmented nets with application to text understanding and dialogue.

Neural Emoji Recommendation in Dialogue Systems

- intro: Tsinghua University & Baidu

- arxiv: https://arxiv.org/abs/1612.04609

Memory Networks

Neural Turing Machines

- paper: http://arxiv.org/abs/1410.5401

- Chs: http://www.jianshu.com/p/94dabe29a43b

- github: https://github.com/shawntan/neural-turing-machines

- github: https://github.com/DoctorTeeth/diffmem

- github: https://github.com/carpedm20/NTM-tensorflow

- blog: https://blog.aidangomez.ca/2016/05/16/The-Neural-Turing-Machine/

Memory Networks

- intro: Facebook AI Research

- arxiv: http://arxiv.org/abs/1410.3916

- github: https://github.com/npow/MemNN

End-To-End Memory Networks

- intro: Facebook AI Research

- intro: Continuous version of memory extraction via softmax. “Weakly supervised memory networks”

- arxiv: http://arxiv.org/abs/1503.08895

- github: https://github.com/facebook/MemNN

- github: https://github.com/vinhkhuc/MemN2N-babi-python

- github: https://github.com/npow/MemN2N

- github: https://github.com/domluna/memn2n

- github(TensorFlow): https://github.com/abhaikollara/MemN2N-Tensorflow

- video: http://research.microsoft.com/apps/video/default.aspx?id=259920&r=1

- video: http://pan.baidu.com/s/1pKiGLzP
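
The "continuous version of memory extraction via softmax" mentioned in the intro above can be illustrated with a minimal sketch. It assumes the query and the memory slots are already embedded as plain vectors and shows a single read step only. The names `soft_memory_read`, `memory_keys`, and `memory_values` are hypothetical and are not taken from the paper or from any of the linked repositories.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def soft_memory_read(query, memory_keys, memory_values):
    """Soft (differentiable) read from memory.

    Every slot gets a softmax weight based on how well its key matches
    the query; the output is the weighted sum of the slot values.
    Names and shapes here are illustrative assumptions.
    """
    weights = softmax([dot(query, key) for key in memory_keys])
    dim = len(memory_values[0])
    output = [
        sum(w * value[i] for w, value in zip(weights, memory_values))
        for i in range(dim)
    ]
    return weights, output


# Toy example: three memory slots with 2-dimensional embeddings.
query = [1.0, 0.0]
memory_keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
memory_values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
weights, output = soft_memory_read(query, memory_keys, memory_values)
```

Because every slot contributes in proportion to its weight, no hard choice of a single memory is made, which is what allows this kind of read to be trained with ordinary backpropagation.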

Reinforcement Learning Neural Turing Machines - Revised

Learning to Transduce with Unbounded Memory

- intro: Google DeepMind

- arxiv: http://arxiv.org/abs/1506.02516

How to Code and Understand DeepMind’s Neural Stack Machine

- blog: https://iamtrask.github.io/2016/02/25/deepminds-neural-stack-machine/

- video tutorial: http://pan.baidu.com/s/1qX0EGDe

Ask Me Anything: Dynamic Memory Networks for Natural Language Processing

- intro: Memory networks implemented via RNNs and gated recurrent units (GRUs).

- arxiv: http://arxiv.org/abs/1506.07285

- blog(“Implementing Dynamic memory networks”): http://yerevann.github.io/2016/02/05/implementing-dynamic-memory-networks/

- github(Python): https://github.com/swstarlab/DynamicMemoryNetworks

Ask Me Even More: Dynamic Memory Tensor Networks (Extended Model)

- intro: extensions for the Dynamic Memory Network (DMN)

- arxiv: https://arxiv.org/abs/1703.03939

- github: https://github.com/rgsachin/DMTN

Structured Memory for Neural Turing Machines

- intro: IBM Watson

- arxiv: http://arxiv.org/abs/1510.03931

Dynamic Memory Networks for Visual and Textual Question Answering

- intro: MetaMind 2016

- arxiv: http://arxiv.org/abs/1603.01417

- slides: http://slides.com/smerity/dmn-for-tqa-and-vqa-nvidia-gtc#/

- github: https://github.com/therne/dmn-tensorflow

- github(Theano): https://github.com/ethancaballero/Improved-Dynamic-Memory-Networks-DMN-plus

- review: https://www.technologyreview.com/s/600958/the-memory-trick-making-computers-seem-smarter/

- github(TensorFlow): https://github.com/DeepRNN/visual_question_answering

Neural GPUs Learn Algorithms

- arxiv: http://arxiv.org/abs/1511.08228

- github: https://github.com/tensorflow/models/tree/master/neural_gpu

- github: https://github.com/ikostrikov/torch-neural-gpu

- github: https://github.com/tristandeleu/neural-gpu

Hierarchical Memory Networks

Convolutional Residual Memory Networks

NTM-Lasagne: A Library for Neural Turing Machines in Lasagne

- github: https://github.com/snipsco/ntm-lasagne

- blog: https://medium.com/snips-ai/ntm-lasagne-a-library-for-neural-turing-machines-in-lasagne-2cdce6837315#.96pnh1m6j

Evolving Neural Turing Machines for Reward-based Learning

- homepage: http://sebastianrisi.com/entm/

- paper: http://sebastianrisi.com/wp-content/uploads/greve_gecco16.pdf

- code: https://www.dropbox.com/s/t019mwabw5nsnxf/neuralturingmachines-master.zip?dl=0

Hierarchical Memory Networks for Answer Selection on Unknown Words

- intro: COLING 2016

- arxiv: https://arxiv.org/abs/1609.08843

- github: https://github.com/jacoxu/HMN4QA

Gated End-to-End Memory Networks

Can Active Memory Replace Attention?

- intro: Google Brain

- arxiv: https://arxiv.org/abs/1610.08613

A Taxonomy for Neural Memory Networks

- intro: University of Florida

- arxiv: https://arxiv.org/abs/1805.00327

Papers

Globally Normalized Transition-Based Neural Networks

- intro: part-of-speech tagging, dependency parsing and sentence compression

- arxiv: http://arxiv.org/abs/1603.06042

- github(SyntaxNet): https://github.com/tensorflow/models/tree/master/syntaxnet

A Decomposable Attention Model for Natural Language Inference

- intro: EMNLP 2016

- arxiv: http://arxiv.org/abs/1606.01933

- github(Keras+spaCy): https://github.com/explosion/spaCy/tree/master/examples/keras_parikh_entailment

Improving Recurrent Neural Networks For Sequence Labelling

Recurrent Memory Networks for Language Modeling

- arxiv: http://arxiv.org/abs/1601.01272

- github: https://github.com/ketranm/RMN

Tweet2Vec: Learning Tweet Embeddings Using Character-level CNN-LSTM Encoder-Decoder

- intro: MIT Media Lab

- arxiv: http://arxiv.org/abs/1607.07514

Learning text representation using recurrent convolutional neural network with highway layers

- intro: Neu-IR ’16 SIGIR Workshop on Neural Information Retrieval

- arxiv: http://arxiv.org/abs/1606.06905

- github: https://github.com/wenying45/deep_learning_tutorial/tree/master/rcnn-hw

Ask the GRU: Multi-task Learning for Deep Text Recommendations

From phonemes to images: levels of representation in a recurrent neural model of visually-grounded language learning

- intro: COLING 2016

- arxiv: https://arxiv.org/abs/1610.03342

Visualizing Linguistic Shift

A Joint Many-Task Model: Growing a Neural Network for Multiple NLP Tasks

- intro: The University of Tokyo & Salesforce Research

- arxiv: https://arxiv.org/abs/1611.01587

Deep Learning applied to NLP

https://arxiv.org/abs/1703.03091

Attention Is All You Need

- intro: Google Brain & Google Research & University of Toronto

- intro: Just attention + positional encoding = state of the art

- arxiv: https://arxiv.org/abs/1706.03762

- github(Chainer): https://github.com/soskek/attention_is_all_you_need
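
The "attention + positional encoding" summary above can be made concrete with a small sketch of the two ingredients: sinusoidal positional encodings and scaled dot-product attention. This is a simplified illustration in plain Python, assuming a single attention head with no masking and no learned projection matrices. The function names are hypothetical and do not come from the linked Chainer repository.

```python
import math


def positional_encoding(num_positions, d_model):
    """Sinusoidal encodings: sin on even dimensions, cos on odd ones."""
    table = []
    for pos in range(num_positions):
        row = []
        for j in range(d_model):
            # Dimensions 2i and 2i+1 share the frequency 1 / 10000^(2i / d_model).
            angle = pos / (10000 ** ((2 * (j // 2)) / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ])
    return outputs


# Toy self-attention over the encodings of three positions (d_model = 4).
pe = positional_encoding(3, 4)
out = scaled_dot_product_attention(pe, pe, pe)
```

In the full model the queries, keys, and values are learned linear projections of token embeddings plus these encodings, and several heads run in parallel; the sketch leaves those parts out to keep the core computation visible.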

Recent Trends in Deep Learning Based Natural Language Processing

- intro: Beijing Institute of Technology & National University of Singapore & Nanyang Technological University

- arxiv: https://arxiv.org/abs/1708.02709

HotFlip: White-Box Adversarial Examples for NLP

- intro: University of Oregon & Nanjing University

- arxiv: https://arxiv.org/abs/1712.06751

No Metrics Are Perfect: Adversarial Reward Learning for Visual Storytelling

- intro: ACL 2018

- arxiv: https://arxiv.org/abs/1804.09160

Interesting Applications

Data-driven HR - Résumé Analysis Based on Natural Language Processing and Machine Learning

sk_p: a neural program corrector for MOOCs

- intro: MIT

- intro: Using seq2seq to fix buggy code submissions in MOOCs

- arxiv: http://arxiv.org/abs/1607.02902

Neural Generation of Regular Expressions from Natural Language with Minimal Domain Knowledge

- intro: EMNLP 2016

- intro: translating natural language queries into regular expressions which embody their meaning

- arxiv: http://arxiv.org/abs/1608.03000

emoji2vec: Learning Emoji Representations from their Description

- intro: EMNLP 2016

- arxiv: http://arxiv.org/abs/1609.08359

Inside-Outside and Forward-Backward Algorithms Are Just Backprop (Tutorial Paper)

Cruciform: Solving Crosswords with Natural Language Processing

Smart Reply: Automated Response Suggestion for Email

- intro: Google. KDD 2016

- arxiv: https://arxiv.org/abs/1606.04870

- notes: https://blog.acolyer.org/2016/11/24/smart-reply-automated-response-suggestion-for-email/

Deep Learning for RegEx

- intro: a winning submission of Extraction of product attribute values competition (CrowdAnalytix)

- blog: http://dlacombejr.github.io/2016/11/13/deep-learning-for-regex.html

Learning Python Code Suggestion with a Sparse Pointer Network

- intro: Learning to Auto-Complete using RNN Language Models

- intro: University College London

- arxiv: https://arxiv.org/abs/1611.08307

- github: https://github.com/uclmr/pycodesuggest

End-to-End Prediction of Buffer Overruns from Raw Source Code via Neural Memory Networks

https://arxiv.org/abs/1703.02458

Convolutional Sequence to Sequence Learning

- arxiv: https://arxiv.org/abs/1705.03122

- paper: https://s3.amazonaws.com/fairseq/papers/convolutional-sequence-to-sequence-learning.pdf

- github: https://github.com/facebookresearch/fairseq

DeepFix: Fixing Common C Language Errors by Deep Learning

- intro: AAAI 2017. Indian Institute of Science

- project page: http://www.iisc-seal.net/deepfix

- paper: https://www.aaai.org/ocs/index.php/AAAI/AAAI17/paper/view/14603/13921

- bitbucket: https://bitbucket.org/iiscseal/deepfix

Hierarchically-Attentive RNN for Album Summarization and Storytelling

- intro: EMNLP 2017. UNC Chapel Hill

- arxiv: https://arxiv.org/abs/1708.02977

Project

TheanoLM - An Extensible Toolkit for Neural Network Language Modeling

NLP-Caffe: natural language processing with Caffe

DL4NLP: Deep Learning for Natural Language Processing

- github: https://github.com/nokuno/dl4nlp

Combining CNN and RNN for spoken language identification

- blog: http://yerevann.github.io/2016/06/26/combining-cnn-and-rnn-for-spoken-language-identification/

- github: https://github.com/YerevaNN/Spoken-language-identification/tree/master/theano

Character-Aware Neural Language Models: LSTM language model with CNN over characters in TensorFlow

Neural Relation Extraction with Selective Attention over Instances

- paper: http://nlp.csai.tsinghua.edu.cn/~lzy/publications/acl2016_nre.pdf

- github: https://github.com/thunlp/NRE

deep-simplification: Text simplification using RNNs

- intro: achieves a BLEU score of 61.14

- github: https://github.com/mbartoli/deep-simplification

lamtram: A toolkit for language and translation modeling using neural networks

Lango: Language Lego

- intro: Lango is a natural language processing library for working with the building blocks of language.

- github: https://github.com/ayoungprogrammer/Lango

Sequence-to-Sequence Learning with Attentional Neural Networks

- github(Torch): https://github.com/harvardnlp/seq2seq-attn

harvardnlp code

- intro: open-source implementations of popular deep learning techniques with applications to NLP

- homepage: http://nlp.seas.harvard.edu/code/

Seq2seq: Sequence to Sequence Learning with Keras

debug seq2seq

Recurrent & convolutional neural network modules

- intro: This repo contains Theano implementations of popular neural network components and optimization methods.

- github: https://github.com/taolei87/rcnn

Datasets

Datasets for Natural Language Processing

Blogs

How to read: Character level deep learning

Heavy Metal and Natural Language Processing

- part 1: http://www.degeneratestate.org/posts/2016/Apr/20/heavy-metal-and-natural-language-processing-part-1/

Sequence To Sequence Attention Models In PyCNN

https://talbaumel.github.io/Neural+Attention+Mechanism.html

Source Code Classification Using Deep Learning

http://blog.aylien.com/source-code-classification-using-deep-learning/

My Process for Learning Natural Language Processing with Deep Learning

Convolutional Methods for Text

https://medium.com/@TalPerry/convolutional-methods-for-text-d5260fd5675f

Word2Vec

Word2Vec Tutorial - The Skip-Gram Model

http://mccormickml.com/2016/04/19/word2vec-tutorial-the-skip-gram-model/

Word2Vec Tutorial Part 2 - Negative Sampling

http://mccormickml.com/2017/01/11/word2vec-tutorial-part-2-negative-sampling/

Word2Vec Resources

http://mccormickml.com/2016/04/27/word2vec-resources/

Demos

AskImage.org - Deep Learning for Answering Questions about Images

- homepage: http://www.askimage.org/

Talks / Videos

Navigating Natural Language Using Reinforcement Learning

Resources

So, you need to understand language data? Open-source NLP software can help!

- blog: http://entopix.com/so-you-need-to-understand-language-data-open-source-nlp-software-can-help.html

Curated list of resources on building bots

Notes for deep learning on NLP

https://medium.com/@frank_chung/notes-for-deep-learning-on-nlp-94ddfcb45723#.iouo0v7m7