RNN and LSTM

Types of RNN

1) Plain Tanh Recurrent Neural Networks

2) Gated Recurrent Unit (GRU) Networks

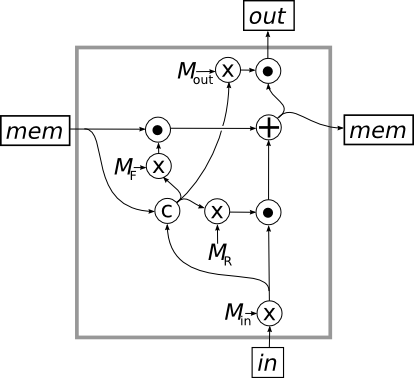

3) Long Short-Term Memory (LSTM)
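The three cell types above differ mainly in their single-step update. The sketch below is a minimal NumPy version under stated assumptions: 1-D vectors, weights acting on the concatenation [h, x], and the GRU convention h_t = (1 - z) * h_{t-1} + z * h_tilde (some references swap z and 1 - z). The weight names (W, Wz, Wr, Wh, W4) are illustrative and do not come from any particular library.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def tanh_rnn_step(x, h, W, b):
    """Plain tanh RNN: h_t = tanh(W [h_{t-1}; x_t] + b)."""
    return np.tanh(W @ np.concatenate([h, x]) + b)

def gru_step(x, h, Wz, Wr, Wh, bz, br, bh):
    """GRU: update gate z, reset gate r, candidate state h_tilde."""
    hx = np.concatenate([h, x])
    z = sigmoid(Wz @ hx + bz)          # how much of the state to overwrite
    r = sigmoid(Wr @ hx + br)          # how much past state feeds the candidate
    h_tilde = np.tanh(Wh @ np.concatenate([r * h, x]) + bh)
    return (1.0 - z) * h + z * h_tilde

def lstm_step(x, h, c, W, b):
    """LSTM: W stacks input, forget, output gate and candidate weights (4n rows)."""
    n = h.shape[0]
    a = W @ np.concatenate([h, x]) + b
    i = sigmoid(a[0:n])                # input gate
    f = sigmoid(a[n:2 * n])            # forget gate
    o = sigmoid(a[2 * n:3 * n])        # output gate
    g = np.tanh(a[3 * n:4 * n])        # candidate cell content
    c_new = f * c + i * g              # additive cell-state update
    h_new = o * np.tanh(c_new)
    return h_new, c_new

# Usage: run each cell over a short random sequence.
rng = np.random.default_rng(0)
n_in, n_h, T = 3, 4, 5
xs = rng.standard_normal((T, n_in))

W = rng.standard_normal((n_h, n_h + n_in)) * 0.1
h = np.zeros(n_h)
for x in xs:
    h = tanh_rnn_step(x, h, W, np.zeros(n_h))

Wz, Wr, Wh = (rng.standard_normal((n_h, n_h + n_in)) * 0.1 for _ in range(3))
h_gru = np.zeros(n_h)
for x in xs:
    h_gru = gru_step(x, h_gru, Wz, Wr, Wh, np.zeros(n_h), np.zeros(n_h), np.zeros(n_h))

W4 = rng.standard_normal((4 * n_h, n_h + n_in)) * 0.1
h_lstm, c_lstm = np.zeros(n_h), np.zeros(n_h)
for x in xs:
    h_lstm, c_lstm = lstm_step(x, h_lstm, c_lstm, W4, np.zeros(4 * n_h))
```

The gated cells add multiplicative gates around the same tanh recurrence; the LSTM additionally keeps a separate cell state c that is updated additively, which is what lets information persist over long spans.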

Tutorials

The Unreasonable Effectiveness of Recurrent Neural Networks

Understanding LSTM Networks

- blog: http://colah.github.io/posts/2015-08-Understanding-LSTMs/

- blog(zh): http://www.jianshu.com/p/9dc9f41f0b29

A Beginner’s Guide to Recurrent Networks and LSTMs

http://deeplearning4j.org/lstm.html

A Deep Dive into Recurrent Neural Nets

http://nikhilbuduma.com/2015/01/11/a-deep-dive-into-recurrent-neural-networks/

Exploring LSTMs

http://blog.echen.me/2017/05/30/exploring-lstms/

A tutorial on training recurrent neural networks, covering BPPT, RTRL, EKF and the “echo state network” approach

- paper: http://minds.jacobs-university.de/sites/default/files/uploads/papers/ESNTutorialRev.pdf

- slides: http://deeplearning.cs.cmu.edu/notes/shaoweiwang.pdf

Long Short-Term Memory: Tutorial on LSTM Recurrent Networks

http://people.idsia.ch/~juergen/lstm/index.htm

LSTM implementation explained

http://apaszke.github.io/lstm-explained.html

Recurrent Neural Networks Tutorial

- Part 1 (Introduction to RNNs): http://www.wildml.com/2015/09/recurrent-neural-networks-tutorial-part-1-introduction-to-rnns/

- Part 2 (Implementing an RNN using Python and Theano): http://www.wildml.com/2015/09/recurrent-neural-networks-tutorial-part-2-implementing-a-language-model-rnn-with-python-numpy-and-theano/

- Part 3 (Understanding the Backpropagation Through Time (BPTT) algorithm): http://www.wildml.com/2015/10/recurrent-neural-networks-tutorial-part-3-backpropagation-through-time-and-vanishing-gradients/

- Part 4 (Implementing a GRU/LSTM RNN): http://www.wildml.com/2015/10/recurrent-neural-network-tutorial-part-4-implementing-a-grulstm-rnn-with-python-and-theano/

Recurrent Neural Networks in DL4J

http://deeplearning4j.org/usingrnns.html

Learning RNN Hierarchies

Element-Research Torch RNN Tutorial for recurrent neural nets: let’s predict time series with a laptop GPU

- blog: https://christopher5106.github.io/deep/learning/2016/07/14/element-research-torch-rnn-tutorial.html

RNNs in Tensorflow, a Practical Guide and Undocumented Features

Learning about LSTMs using Torch

Build a Neural Network (LIVE)

- intro: LSTM

- youtube: https://www.youtube.com/watch?v=KvoZU-ItDiE

- mirror: https://pan.baidu.com/s/1i4KoumL

- github: https://github.com/llSourcell/build_a_neural_net_live

Deriving LSTM Gradient for Backpropagation

http://wiseodd.github.io/techblog/2016/08/12/lstm-backprop/

TensorFlow RNN Tutorial

https://svds.com/tensorflow-rnn-tutorial/

RNN Training Tips and Tricks

https://github.com/karpathy/char-rnn#tips-and-tricks

Tips for Training Recurrent Neural Networks

http://danijar.com/tips-for-training-recurrent-neural-networks/

A Tour of Recurrent Neural Network Algorithms for Deep Learning

http://machinelearningmastery.com/recurrent-neural-network-algorithms-for-deep-learning/

Fundamentals of Deep Learning – Introduction to Recurrent Neural Networks

https://www.analyticsvidhya.com/blog/2017/12/introduction-to-recurrent-neural-networks/

Essentials of Deep Learning : Introduction to Long Short Term Memory

https://www.analyticsvidhya.com/blog/2017/12/fundamentals-of-deep-learning-introduction-to-lstm/

How to build a Recurrent Neural Network in TensorFlow

How to build a Recurrent Neural Network in TensorFlow (1/7)

https://medium.com/@erikhallstrm/hello-world-rnn-83cd7105b767#.2vozogqf7

Using the RNN API in TensorFlow (2/7)

https://medium.com/@erikhallstrm/tensorflow-rnn-api-2bb31821b185#.h0ycrjuo3

Using the LSTM API in TensorFlow (3/7)

https://medium.com/@erikhallstrm/using-the-tensorflow-lstm-api-3-7-5f2b97ca6b73#.k7aciqaxn

Using the Multilayered LSTM API in TensorFlow (4/7)

https://medium.com/@erikhallstrm/using-the-tensorflow-multilayered-lstm-api-f6e7da7bbe40#.dj7dy92m5

Using the DynamicRNN API in TensorFlow (5/7)

https://medium.com/@erikhallstrm/using-the-dynamicrnn-api-in-tensorflow-7237aba7f7ea#.49qw259ks

Using the Dropout API in TensorFlow (6/7)

https://medium.com/@erikhallstrm/using-the-dropout-api-in-tensorflow-2b2e6561dfeb#.a7mc3o9aq

Unfolding RNNs

Unfolding RNNs: RNN : Concepts and Architectures

Unfolding RNNs II: Vanilla, GRU, LSTM RNNs from scratch in Tensorflow

- blog: http://suriyadeepan.github.io/2017-02-13-unfolding-rnn-2/

- github: https://github.com/suriyadeepan/rnn-from-scratch

Train RNN

On the difficulty of training Recurrent Neural Networks

- author: Razvan Pascanu, Tomas Mikolov, Yoshua Bengio

- arxiv: http://arxiv.org/abs/1211.5063

- video talks: http://techtalks.tv/talks/on-the-difficulty-of-training-recurrent-neural-networks/58134/
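One remedy this paper proposes for exploding gradients is gradient norm clipping: when the norm of the gradient exceeds a threshold, the whole gradient is rescaled down to that threshold. A minimal NumPy sketch, assuming the gradients arrive as a list of arrays and the norm is taken jointly over all of them; the threshold value is illustrative.

```python
import numpy as np

def clip_by_global_norm(grads, threshold):
    """Rescale all gradients together if their joint L2 norm exceeds threshold."""
    total_norm = np.sqrt(sum(float(np.sum(g * g)) for g in grads))
    if total_norm > threshold:
        scale = threshold / total_norm
        grads = [g * scale for g in grads]
    return grads, total_norm

grads = [np.array([3.0, 0.0]), np.array([[0.0], [4.0]])]   # joint norm = 5
clipped, norm = clip_by_global_norm(grads, threshold=1.0)
```

Rescaling the whole vector, rather than clipping element-wise, keeps the update direction unchanged and only shortens the step. Frameworks ship equivalents, e.g. `torch.nn.utils.clip_grad_norm_` in PyTorch and `tf.clip_by_global_norm` in TensorFlow.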

A Simple Way to Initialize Recurrent Networks of Rectified Linear Units

- arxiv: http://arxiv.org/abs/1504.00941

- gitxiv: http://gitxiv.com/posts/7j5JXvP3kn5Jf8Waj/irnn-experiment-with-pixel-by-pixel-sequential-mnist

- github: https://github.com/fchollet/keras/blob/master/examples/mnist_irnn.py

- github: https://gist.github.com/GabrielPereyra/353499f2e6e407883b32

- blog(“Implementing Recurrent Neural Net using chainer!”): http://t-satoshi.blogspot.jp/2015/06/implementing-recurrent-neural-net-using.html

- reddit: https://www.reddit.com/r/MachineLearning/comments/31rinf/150400941_a_simple_way_to_initialize_recurrent/

- reddit: https://www.reddit.com/r/MachineLearning/comments/32tgvw/has_anyone_been_able_to_reproduce_the_results_in/
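The idea behind this paper (the IRNN) is a plain RNN with ReLU units whose recurrent weight matrix is initialized to the identity and whose biases start at zero. A minimal NumPy sketch of that initialization and one forward step; the 0.001 standard deviation for the input weights is an assumption for illustration.

```python
import numpy as np

def init_irnn(n_in, n_hidden, rng):
    W_hh = np.eye(n_hidden)                            # recurrent weights = identity
    W_xh = rng.normal(0.0, 0.001, (n_hidden, n_in))    # small input weights (assumed scale)
    b = np.zeros(n_hidden)                             # zero bias
    return W_hh, W_xh, b

def irnn_step(x, h, W_hh, W_xh, b):
    return np.maximum(0.0, W_hh @ h + W_xh @ x + b)    # ReLU recurrence

rng = np.random.default_rng(0)
W_hh, W_xh, b = init_irnn(n_in=2, n_hidden=3, rng=rng)
h = np.array([1.0, 2.0, 0.5])
h_next = irnn_step(np.zeros(2), h, W_hh, W_xh, b)      # zero input: state copied unchanged
```

With zero input, a nonnegative hidden state passes through the identity and the ReLU unchanged, so at initialization the network neither amplifies nor shrinks its state from step to step.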

Batch Normalized Recurrent Neural Networks

Sequence Level Training with Recurrent Neural Networks

- intro: ICLR 2016

- arxiv: http://arxiv.org/abs/1511.06732

- github: https://github.com/facebookresearch/MIXER

- notes: https://www.evernote.com/shard/s189/sh/ada01a82-70a9-48d4-985c-20492ab91e84/8da92be19e704996dc2b929473abed46

Training Recurrent Neural Networks (PhD thesis)

- author: Ilya Sutskever

- thesis: https://www.cs.utoronto.ca/~ilya/pubs/ilya_sutskever_phd_thesis.pdf

Deep learning for control using augmented Hessian-free optimization

- blog: https://studywolf.wordpress.com/2016/04/04/deep-learning-for-control-using-augmented-hessian-free-optimization/

- github: https://github.com/studywolf/blog/blob/master/train_AHF/train_hf.py

Hierarchical Conflict Propagation: Sequence Learning in a Recurrent Deep Neural Network

Recurrent Batch Normalization

- arxiv: http://arxiv.org/abs/1603.09025

- github: https://github.com/iassael/torch-bnlstm

- github: https://github.com/cooijmanstim/recurrent-batch-normalization

- github(“LSTM with Batch Normalization”): https://github.com/fchollet/keras/pull/2183

- github: https://github.com/jihunchoi/recurrent-batch-normalization-pytorch

- notes: http://www.shortscience.org/paper?bibtexKey=journals/corr/CooijmansBLC16

Batch normalized LSTM for Tensorflow

- blog: http://olavnymoen.com/2016/07/07/rnn-batch-normalization

- github: https://github.com/OlavHN/bnlstm

Optimizing Performance of Recurrent Neural Networks on GPUs

- arxiv: http://arxiv.org/abs/1604.01946

- github: https://github.com/parallel-forall/code-samples/blob/master/posts/rnn/LSTM.cu

Path-Normalized Optimization of Recurrent Neural Networks with ReLU Activations

Explaining and illustrating orthogonal initialization for recurrent neural networks
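A common recipe for drawing a random orthogonal recurrent matrix is to take the Q factor of the QR decomposition of a Gaussian matrix and fix its column signs with the diagonal of R. A minimal NumPy sketch; this is standard practice and not necessarily the exact procedure used in the linked post.

```python
import numpy as np

def orthogonal(n, rng):
    a = rng.standard_normal((n, n))
    q, r = np.linalg.qr(a)
    q = q * np.sign(np.diag(r))    # flip column signs so the draw is uniform over orthogonal matrices
    return q

rng = np.random.default_rng(0)
W = orthogonal(5, rng)
```

Every singular value of an orthogonal matrix equals 1, so repeatedly multiplying a hidden state by W neither grows nor shrinks its norm, which is the motivation for this initialization.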

Professor Forcing: A New Algorithm for Training Recurrent Networks

- intro: NIPS 2016

- arxiv: https://arxiv.org/abs/1610.09038

- github: https://github.com/anirudh9119/LM_GANS

Phased LSTM: Accelerating Recurrent Network Training for Long or Event-based Sequences

- intro: Selected for an oral presentation at NIPS, 2016. University of Zurich and ETH Zurich

- arxiv: https://arxiv.org/abs/1610.09513

- github: https://github.com/dannyneil/public_plstm

- github: https://github.com/Enny1991/PLSTM

- github: https://github.com/philipperemy/tensorflow-phased-lstm

- docs: https://www.tensorflow.org/api_docs/python/tf/contrib/rnn/PhasedLSTMCell

- reddit: https://www.reddit.com/r/MachineLearning/comments/5bmfw5/r_phased_lstm_accelerating_recurrent_network/

Tuning Recurrent Neural Networks with Reinforcement Learning (RL Tuner)

- paper: http://openreview.net/pdf?id=BJ8fyHceg

- blog: https://magenta.tensorflow.org/2016/11/09/tuning-recurrent-networks-with-reinforcement-learning/

- github: https://github.com/tensorflow/magenta/tree/master/magenta/models/rl_tuner

Capacity and Trainability in Recurrent Neural Networks

- intro: Google Brain

- arxiv: https://arxiv.org/abs/1611.09913

Large-Batch Training for LSTM and Beyond

- intro: UC Berkeley & UCLA & Google

- paper: https://www2.eecs.berkeley.edu/Pubs/TechRpts/2018/EECS-2018-138.pdf

Learn To Execute Programs

Learning to Execute

- arxiv: http://arxiv.org/abs/1410.4615

- github: https://github.com/wojciechz/learning_to_execute

- github(Tensorflow): https://github.com/raindeer/seq2seq_experiments

Neural Programmer-Interpreters

- intro: Google DeepMind. ICLR 2016 Best Paper

- arxiv: http://arxiv.org/abs/1511.06279

- project page: http://www-personal.umich.edu/~reedscot/iclr_project.html

- github: https://github.com/mokemokechicken/keras_npi

A Programmer-Interpreter Neural Network Architecture for Prefrontal Cognitive Control

Convolutional RNN: an Enhanced Model for Extracting Features from Sequential Data

Neural Random-Access Machines

Attention Models

Recurrent Models of Visual Attention

- intro: Google DeepMind. NIPS 2014

- arxiv: http://arxiv.org/abs/1406.6247

- data: https://github.com/deepmind/mnist-cluttered

- github: https://github.com/Element-Research/rnn/blob/master/examples/recurrent-visual-attention.lua

Recurrent Model of Visual Attention

- intro: Google DeepMind

- paper: http://arxiv.org/abs/1406.6247

- gitxiv: http://gitxiv.com/posts/ZEobCXSh23DE8a8mo/recurrent-models-of-visual-attention

- blog: http://torch.ch/blog/2015/09/21/rmva.html

- github: https://github.com/Element-Research/rnn/blob/master/scripts/evaluate-rva.lua

Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

A Neural Attention Model for Abstractive Sentence Summarization

- intro: EMNLP 2015. Facebook AI Research

- arxiv: http://arxiv.org/abs/1509.00685

- github: https://github.com/facebook/NAMAS

Effective Approaches to Attention-based Neural Machine Translation

- intro: EMNLP 2015

- paper: http://nlp.stanford.edu/pubs/emnlp15_attn.pdf

- project: http://nlp.stanford.edu/projects/nmt/

- github: https://github.com/lmthang/nmt.matlab

Generating Images from Captions with Attention

- arxiv: http://arxiv.org/abs/1511.02793

- github: https://github.com/emansim/text2image

- demo: http://www.cs.toronto.edu/~emansim/cap2im.html

Attention and Memory in Deep Learning and NLP

Survey on the attention based RNN model and its applications in computer vision

Attention in Long Short-Term Memory Recurrent Neural Networks

How to Visualize Your Recurrent Neural Network with Attention in Keras

- blog: https://medium.com/datalogue/attention-in-keras-1892773a4f22

- github: https://github.com/datalogue/keras-attention

Papers

Generating Sequences With Recurrent Neural Networks

- arxiv: http://arxiv.org/abs/1308.0850

- github: https://github.com/hardmaru/write-rnn-tensorflow

- github: https://github.com/szcom/rnnlib

- blog: http://blog.otoro.net/2015/12/12/handwriting-generation-demo-in-tensorflow/

A Clockwork RNN

- arxiv: https://arxiv.org/abs/1402.3511

- github: https://github.com/makistsantekidis/clockworkrnn

- github: https://github.com/zergylord/ClockworkRNN

Unsupervised Learning of Video Representations using LSTMs

- intro: ICML 2015

- project page: http://www.cs.toronto.edu/~nitish/unsupervised_video/

- arxiv: http://arxiv.org/abs/1502.04681

- code: http://www.cs.toronto.edu/~nitish/unsupervised_video/unsup_video_lstm.tar.gz

- github: https://github.com/emansim/unsupervised-videos

An Empirical Exploration of Recurrent Network Architectures

Improved Semantic Representations From Tree-Structured Long Short-Term Memory Networks

- intro: ACL 2015. Tree RNNs aka Recursive Neural Networks

- arxiv: https://arxiv.org/abs/1503.00075

- slides: http://lit.eecs.umich.edu/wp-content/uploads/2015/10/tree-lstms.pptx

- gitxiv: http://www.gitxiv.com/posts/esrArT2iLmSfNRrto/tree-structured-long-short-term-memory-networks

- github: https://github.com/stanfordnlp/treelstm

- github: https://github.com/ofirnachum/tree_rnn

LSTM: A Search Space Odyssey

- arxiv: http://arxiv.org/abs/1503.04069

- notes: https://www.evernote.com/shard/s189/sh/48da42c5-8106-4f0d-b835-c203466bfac4/50d7a3c9a961aefd937fae3eebc6f540

- blog(“Dissecting the LSTM”): https://medium.com/jim-fleming/implementing-lstm-a-search-space-odyssey-7d50c3bacf93#.crg8pztop

- github: https://github.com/jimfleming/lstm_search

Inferring Algorithmic Patterns with Stack-Augmented Recurrent Nets

A Critical Review of Recurrent Neural Networks for Sequence Learning

- arxiv: http://arxiv.org/abs/1506.00019

- review: http://blog.terminal.com/a-thorough-and-readable-review-on-rnns/

Visualizing and Understanding Recurrent Networks

- intro: ICLR 2016. Andrej Karpathy, Justin Johnson, Fei-Fei Li

- arxiv: http://arxiv.org/abs/1506.02078

- slides: http://www.robots.ox.ac.uk/~seminars/seminars/Extra/2015_07_06_AndrejKarpathy.pdf

- github: https://github.com/karpathy/char-rnn

Scheduled Sampling for Sequence Prediction with Recurrent Neural Networks

- intro: Winner of MSCOCO image captioning challenge, 2015

- arxiv: http://arxiv.org/abs/1506.03099
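Scheduled sampling feeds the decoder the true previous token with probability eps_i and its own previous prediction otherwise, with eps_i decaying over training step i. The paper describes linear, exponential and inverse sigmoid decay schedules; a minimal Python sketch of those schedules and the per-token choice, where all default parameter values are illustrative assumptions.

```python
import math
import random

def linear_decay(i, k=1.0, c=1e-4, eps_min=0.05):
    return max(eps_min, k - c * i)           # eps_i = max(eps, k - c*i)

def exponential_decay(i, k=0.9999):
    return k ** i                            # eps_i = k^i, k < 1

def inverse_sigmoid_decay(i, k=1000.0):
    return k / (k + math.exp(i / k))         # eps_i = k / (k + exp(i/k)), k >= 1

def choose_input(ground_truth_prev, model_prev, eps, rng):
    """With probability eps feed the true previous token, otherwise the model's own."""
    return ground_truth_prev if rng.random() < eps else model_prev

rng = random.Random(0)
schedule = [inverse_sigmoid_decay(i) for i in (0, 1000, 5000)]
```

Early in training eps_i is close to 1 (teacher forcing); as it decays, the model increasingly conditions on its own predictions, which is closer to how it will run at inference time.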

Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting

- arxiv: https://arxiv.org/abs/1506.04214

- github: https://github.com/loliverhennigh/Convolutional-LSTM-in-Tensorflow

Grid Long Short-Term Memory

- arxiv: http://arxiv.org/abs/1507.01526

- github(Torch7): https://github.com/coreylynch/grid-lstm/

Depth-Gated LSTM

- arxiv: http://arxiv.org/abs/1508.03790

- github: GitHub(dglstm.h+dglstm.cc)

Deep Knowledge Tracing

- paper: https://web.stanford.edu/~cpiech/bio/papers/deepKnowledgeTracing.pdf

- github: https://github.com/chrispiech/DeepKnowledgeTracing

Top-down Tree Long Short-Term Memory Networks

Improving performance of recurrent neural network with relu nonlinearity

- intro: ICLR 2016

- arxiv: https://arxiv.org/abs/1511.03771

Alternative structures for character-level RNNs

- intro: INRIA & Facebook AI Research. ICLR 2016

- arxiv: http://arxiv.org/abs/1511.06303

- github: https://github.com/facebook/Conditional-character-based-RNN

Long Short-Term Memory-Networks for Machine Reading

Lipreading with Long Short-Term Memory

Associative Long Short-Term Memory

Representation of linguistic form and function in recurrent neural networks

Architectural Complexity Measures of Recurrent Neural Networks

Easy-First Dependency Parsing with Hierarchical Tree LSTMs

Training Input-Output Recurrent Neural Networks through Spectral Methods

Sequential Neural Models with Stochastic Layers

Neural networks with differentiable structure

What You Get Is What You See: A Visual Markup Decompiler

- project page: http://lstm.seas.harvard.edu/latex/

- arxiv: http://arxiv.org/abs/1609.04938

- github: https://github.com/harvardnlp/im2markup

- github(Tensorflow): https://github.com/ssampang/im2latex

- github: https://github.com/opennmt/im2text

- github: https://github.com/ritheshkumar95/im2latex-tensorflow

Hybrid computing using a neural network with dynamic external memory

- intro: Nature 2016

- keywords: Differentiable Neural Computer (DNC)

- paper: https://www.nature.com/articles/nature20101.epdf?author_access_token=ImTXBI8aWbYxYQ51Plys8NRgN0jAjWel9jnR3ZoTv0MggmpDmwljGswxVdeocYSurJ3hxupzWuRNeGvvXnoO8o4jTJcnAyhGuZzXJ1GEaD-Z7E6X_a9R-xqJ9TfJWBqz

- github: https://github.com/deepmind/dnc

Skip RNN: Learning to Skip State Updates in Recurrent Neural Networks

- project page: https://imatge-upc.github.io/skiprnn-2017-telecombcn/

- arxiv: https://arxiv.org/abs/1708.06834

Dilated Recurrent Neural Networks

- intro: NIPS 2017. IBM & University of Illinois at Urbana-Champaign

- keywords: DilatedRNN

- arxiv: https://arxiv.org/abs/1710.02224

- github(Tensorflow): https://github.com/code-terminator/DilatedRNN

- github: https://github.com/zalandoresearch/pt-dilate-rnn

Excitation Backprop for RNNs

- arxiv: https://arxiv.org/abs/1711.06778

Recurrent Relational Networks for Complex Relational Reasoning

- project page: https://rasmusbergpalm.github.io/recurrent-relational-networks/

- arxiv: https://arxiv.org/abs/1711.08028

- github: https://github.com/rasmusbergpalm/recurrent-relational-networks

Learning Compact Recurrent Neural Networks with Block-Term Tensor Decomposition

- intro: University of Electronic Science and Technology of China & Brown University & University of Utah & XJERA LABS PTE.LTD

- arxiv: https://arxiv.org/abs/1712.05134

LSTMVis

Visual Analysis of Hidden State Dynamics in Recurrent Neural Networks

- homepage: http://lstm.seas.harvard.edu/

- demo: http://lstm.seas.harvard.edu/client/index.html

- arxiv: https://arxiv.org/abs/1606.07461

- github: https://github.com/HendrikStrobelt/LSTMVis

Recurrent Memory Array Structures

Recurrent Highway Networks

- author: Julian Georg Zilly, Rupesh Kumar Srivastava, Jan Koutník, Jürgen Schmidhuber

- arxiv: http://arxiv.org/abs/1607.03474

- github(Tensorflow+Torch): https://github.com/julian121266/RecurrentHighwayNetworks/

DeepSoft: A vision for a deep model of software

Recurrent Neural Networks With Limited Numerical Precision

Hierarchical Multiscale Recurrent Neural Networks

- arxiv: http://arxiv.org/abs/1609.01704

- notes: https://github.com/dennybritz/deeplearning-papernotes/blob/master/notes/hm-rnn.md

- notes: https://medium.com/@jimfleming/notes-on-hierarchical-multiscale-recurrent-neural-networks-7362532f3b64#.pag4kund0

LightRNN

LightRNN: Memory and Computation-Efficient Recurrent Neural Networks

- intro: NIPS 2016

- arxiv: https://arxiv.org/abs/1610.09893

Full-Capacity Unitary Recurrent Neural Networks

- intro: NIPS 2016

- arxiv: https://arxiv.org/abs/1611.00035

- github: https://github.com/stwisdom/urnn

DeepCoder: Learning to Write Programs

shuttleNet: A biologically-inspired RNN with loop connection and parameter sharing

Tracking the World State with Recurrent Entity Networks

- intro: Facebook AI Research

- arxiv: https://arxiv.org/abs/1612.03969

- github(Official): https://github.com/facebook/MemNN/tree/master/EntNet-babi

Robust LSTM-Autoencoders for Face De-Occlusion in the Wild

- intro: National University of Singapore & Peking University

- arxiv: https://arxiv.org/abs/1612.08534

Simplified Gating in Long Short-term Memory (LSTM) Recurrent Neural Networks

The Statistical Recurrent Unit

- intro: CMU

- arxiv: https://arxiv.org/abs/1703.00381

Factorization tricks for LSTM networks

- intro: ICLR 2017 Workshop

- arxiv: https://arxiv.org/abs/1703.10722

- github: https://github.com/okuchaiev/f-lm

Bayesian Recurrent Neural Networks

- intro: UC Berkeley

- arxiv: https://arxiv.org/abs/1704.02798

- github: https://github.com/mirceamironenco/BayesianRecurrentNN

Fast-Slow Recurrent Neural Networks

Visualizing LSTM decisions

- arxiv: https://arxiv.org/abs/1705.08153

Recurrent Additive Networks

- intro: University of Washington & Allen Institute for Artificial Intelligence

- arxiv: https://arxiv.org/abs/1705.07393

- paper: http://www.kentonl.com/pub/llz.2017.pdf

- github(PyTorch): https://github.com/bheinzerling/ran

Recent Advances in Recurrent Neural Networks

- intro: University of Toronto & University of Waterloo

- arxiv: https://arxiv.org/abs/1801.01078

Grow and Prune Compact, Fast, and Accurate LSTMs

- arxiv: https://arxiv.org/abs/1805.11797

Projects

NeuralTalk (Deprecated): a Python+numpy project for learning Multimodal Recurrent Neural Networks that describe images with sentences

NeuralTalk2: Efficient Image Captioning code in Torch, runs on GPU

char-rnn in Blocks

Project: pycaffe-recurrent

Using neural networks for password cracking

- blog: https://0day.work/using-neural-networks-for-password-cracking/

- github: https://github.com/gehaxelt/RNN-Passwords

torch-rnn: Efficient, reusable RNNs and LSTMs for torch

Deploying a model trained with GPU in Torch into JavaScript, for everyone to use

- blog: http://testuggine.ninja/blog/torch-conversion

- demo: http://testuggine.ninja/DRUMPF-9000/

- github: https://github.com/Darktex/char-rnn

LSTM implementation on Caffe

JNN: Java Neural Network Library

- intro: C2W model, LSTM-based Language Model, LSTM-based Part-Of-Speech-Tagger Model

- github: https://github.com/wlin12/JNN

LSTM-Autoencoder: Seq2Seq LSTM Autoencoder

RNN Language Model Variations

- intro: Standard LSTM, Gated Feedback LSTM, 1D-Grid LSTM

- github: https://github.com/cheng6076/mlm

keras-extra: Extra Layers for Keras to connect CNN with RNN

Dynamic Vanilla RNN, GRU, LSTM, 2-layer Stacked LSTM with Tensorflow Higher Order Ops

PRNN: A fast implementation of recurrent neural network layers in CUDA

- intro: Baidu Research

- blog: https://svail.github.io/persistent_rnns/

- github: https://github.com/baidu-research/persistent-rnn

min-char-rnn: Minimal character-level language model with a Vanilla Recurrent Neural Network, in Python/numpy

rnn: Recurrent Neural Network library for Torch7’s nn

word-rnn-tensorflow: Multi-layer Recurrent Neural Networks (LSTM, RNN) for word-level language models in Python using TensorFlow

tf-char-rnn: Tensorflow implementation of char-rnn

translit-rnn: Automatic transliteration with LSTM

- blog: http://yerevann.github.io/2016/09/09/automatic-transliteration-with-lstm/

- github: https://github.com/YerevaNN/translit-rnn

tf_lstm.py: Simple implementation of LSTM in Tensorflow in 50 lines (+ 130 lines of data generation and comments)

Handwriting generating with RNN

- github: https://github.com/Arn-O/kadenze-deep-creative-apps/blob/master/final-project/glyphs-rnn.ipynb

RecNet - Recurrent Neural Network Framework

Blogs

Survey on Attention-based Models Applied in NLP

http://yanran.li/peppypapers/2015/10/07/survey-attention-model-1.html

Survey on Advanced Attention-based Models

http://yanran.li/peppypapers/2015/10/07/survey-attention-model-2.html

Online Representation Learning in Recurrent Neural Language Models

http://www.marekrei.com/blog/online-representation-learning-in-recurrent-neural-language-models/

Fun with Recurrent Neural Nets: One More Dive into CNTK and TensorFlow

Materials to understand LSTM

https://medium.com/@shiyan/materials-to-understand-lstm-34387d6454c1#.4mt3bzoau

Understanding LSTM and its diagrams

:star::star::star::star::star:

- blog: https://medium.com/@shiyan/understanding-lstm-and-its-diagrams-37e2f46f1714

- slides: https://github.com/shi-yan/FreeWill/blob/master/Docs/Diagrams/lstm_diagram.pptx

Persistent RNNs: 30 times faster RNN layers at small mini-batch sizes

Persistent RNNs: Stashing Recurrent Weights On-Chip

- intro: Greg Diamos, Baidu Silicon Valley AI Lab

- paper: http://jmlr.org/proceedings/papers/v48/diamos16.pdf

- blog: http://svail.github.io/persistent_rnns/

- slides: http://on-demand.gputechconf.com/gtc/2016/presentation/s6673-greg-diamos-persisten-rnns.pdf

All of Recurrent Neural Networks

https://medium.com/@jianqiangma/all-about-recurrent-neural-networks-9e5ae2936f6e#.q4s02elqg

Rolling and Unrolling RNNs

https://shapeofdata.wordpress.com/2016/04/27/rolling-and-unrolling-rnns/

Sequence prediction using recurrent neural networks(LSTM) with TensorFlow: LSTM regression using TensorFlow

- blog: http://mourafiq.com/2016/05/15/predicting-sequences-using-rnn-in-tensorflow.html

- github: https://github.com/mouradmourafiq/tensorflow-lstm-regression

LSTMs

Machines and Magic: Teaching Computers to Write Harry Potter

- blog: https://medium.com/@joycex99/machines-and-magic-teaching-computers-to-write-harry-potter-37839954f252#.4fxemal9t

- github: https://github.com/joycex99/hp-word-model

Crash Course in Recurrent Neural Networks for Deep Learning

http://machinelearningmastery.com/crash-course-recurrent-neural-networks-deep-learning/

Understanding Stateful LSTM Recurrent Neural Networks in Python with Keras

Recurrent Neural Networks in Tensorflow

- part I: http://r2rt.com/recurrent-neural-networks-in-tensorflow-i.html

- part II: http://r2rt.com/recurrent-neural-networks-in-tensorflow-ii.html

Written Memories: Understanding, Deriving and Extending the LSTM

http://r2rt.com/written-memories-understanding-deriving-and-extending-the-lstm.html

Attention and Augmented Recurrent Neural Networks

- blog: http://distill.pub/2016/augmented-rnns/

- github: https://github.com/distillpub/post--augmented-rnns

Interpreting and Visualizing Neural Networks for Text Processing

https://civisanalytics.com/blog/data-science/2016/09/22/neural-network-visualization/

A simple design pattern for recurrent deep learning in TensorFlow

- blog: https://medium.com/@devnag/a-simple-design-pattern-for-recurrent-deep-learning-in-tensorflow-37aba4e2fd6b#.homq9zsyr

- github: https://github.com/devnag/tensorflow-bptt

RNN Spelling Correction: To crack a nut with a sledgehammer

Recurrent Neural Network Gradients, and Lessons Learned Therein

- blog: http://willwolf.io/en/2016/10/13/recurrent-neural-network-gradients-and-lessons-learned-therein/

A noob’s guide to implementing RNN-LSTM using Tensorflow

http://monik.in/a-noobs-guide-to-implementing-rnn-lstm-using-tensorflow/

Non-Zero Initial States for Recurrent Neural Networks

Interpreting neurons in an LSTM network

http://yerevann.github.io/2017/06/27/interpreting-neurons-in-an-LSTM-network/

Optimizing RNN (Baidu Silicon Valley AI Lab)

Optimizing RNN performance

Optimizing RNNs with Differentiable Graphs

- blog: http://svail.github.io/diff_graphs/

- notes: http://research.baidu.com/svail-tech-notes-optimizing-rnns-differentiable-graphs/

Resources

Awesome Recurrent Neural Networks - A curated list of resources dedicated to RNN

- homepage: http://jiwonkim.org/awesome-rnn/

- github: https://github.com/kjw0612/awesome-rnn

Jürgen Schmidhuber’s page on Recurrent Neural Networks

http://people.idsia.ch/~juergen/rnn.html

Reading and Questions

Are there any Recurrent convolutional neural network implementations out there?